3D Semantic Label with Limited Annotations Benchmark

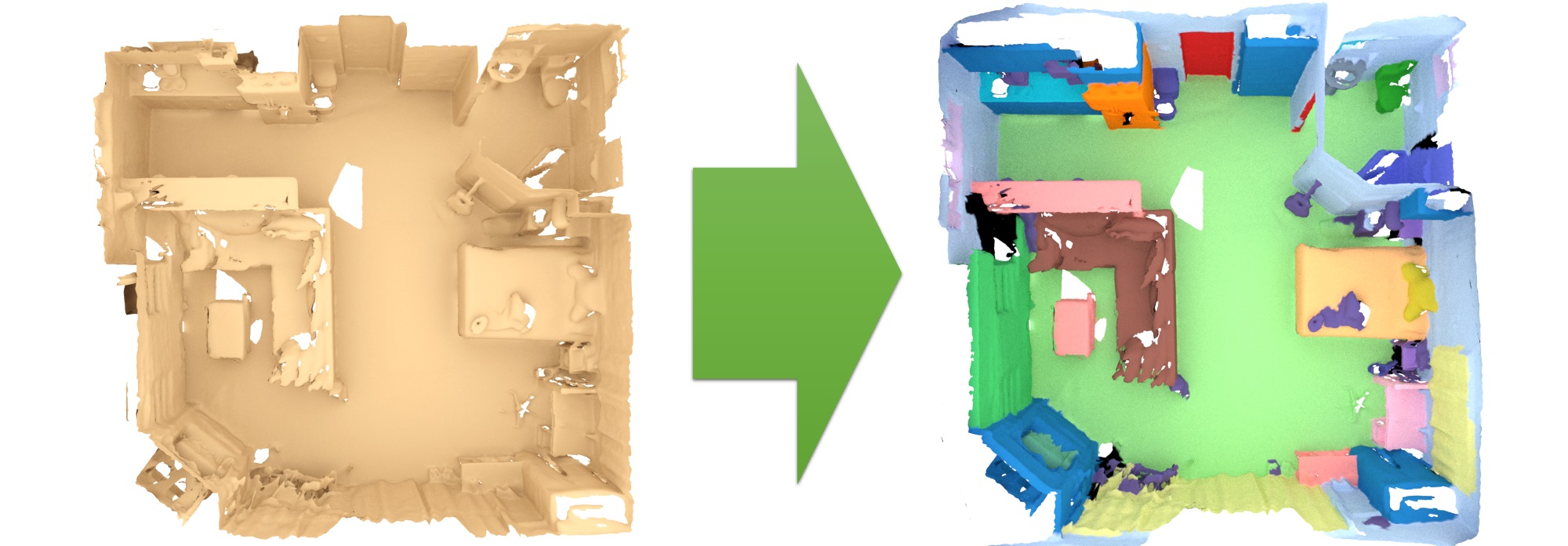

The 3D semantic labeling task involves predicting a semantic label for every vertex of a 3D scan mesh.

Evaluation and metrics

Our evaluation ranks all methods according to the PASCAL VOC intersection-over-union metric (IoU): IoU = TP / (TP + FP + FN), where TP, FP, and FN are the numbers of true positive, false positive, and false negative vertices, respectively. Predicted labels are evaluated per vertex over the respective 3D scan mesh. Methods that operate on other 3D representations, such as voxel grids or point clouds, must map their predicted labels onto the mesh vertices; the evaluation helpers include one such example for mapping grid predictions to mesh vertices.
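The per-vertex evaluation can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the benchmark's evaluation helpers: `transfer_labels_nearest` and `per_class_iou` are hypothetical names, point-cloud predictions are copied to each mesh vertex from the nearest predicted point (one common choice; the helpers' own grid-to-vertex example may work differently), and vertices whose ground-truth label is not an evaluated class are skipped.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def transfer_labels_nearest(
    points: Sequence[Point],
    point_labels: Sequence[int],
    vertices: Sequence[Point],
) -> List[int]:
    """Copy to each mesh vertex the label of its nearest predicted point.

    Hypothetical helper. Brute force, O(len(vertices) * len(points));
    a spatial index such as a k-d tree would be used for real scans.
    """
    vertex_labels = []
    for v in vertices:
        nearest = min(range(len(points)), key=lambda i: math.dist(v, points[i]))
        vertex_labels.append(point_labels[nearest])
    return vertex_labels


def per_class_iou(
    gt: Sequence[int], pred: Sequence[int], class_ids: Sequence[int]
) -> Dict[int, float]:
    """Per-class IoU = TP / (TP + FP + FN), counted over mesh vertices.

    Assumption: vertices whose ground-truth label is not in class_ids are
    skipped. A class with TP + FP + FN == 0 gets NaN (IoU is undefined).
    """
    valid = set(class_ids)
    tp = dict.fromkeys(class_ids, 0)
    fp = dict.fromkeys(class_ids, 0)
    fn = dict.fromkeys(class_ids, 0)
    for g, p in zip(gt, pred):
        if g not in valid:
            continue  # ground truth not evaluated
        if p == g:
            tp[g] += 1
        else:
            fn[g] += 1  # vertex of class g that was missed
            if p in valid:
                fp[p] += 1  # vertex wrongly assigned to class p
    return {
        c: tp[c] / (tp[c] + fp[c] + fn[c]) if tp[c] + fp[c] + fn[c] else math.nan
        for c in class_ids
    }


# Toy example with made-up data: two predicted points, four mesh vertices.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
point_labels = [1, 2]
vertices = [(0.1, 0.0, 0.0), (0.4, 0.0, 0.0), (0.6, 0.0, 0.0), (0.9, 0.0, 0.0)]
gt = [1, 1, 1, 2]

pred = transfer_labels_nearest(points, point_labels, vertices)
print(pred)  # [1, 1, 2, 2]
print(per_class_iou(gt, pred, class_ids=[1, 2]))  # {1: 0.6666666666666666, 2: 0.5}
```

In the toy scan, the third vertex is labeled 2 by its nearest point while its ground truth is 1, so it counts once as a false negative for class 1 and once as a false positive for class 2.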

This table lists the benchmark results for the 3D semantic label with limited annotations scenario. Each score cell gives the IoU followed by the method's rank in that column.

| Method | Info | avg IoU | bathtub | bed | bookshelf | cabinet | chair | counter | curtain | desk | door | floor | otherfurniture | picture | refrigerator | shower curtain | sink | sofa | table | toilet | wall | window |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Q2E | | 0.721 (1) | 0.984 (1) | 0.785 (1) | 0.684 (2) | 0.693 (2) | 0.879 (1) | 0.563 (1) | 0.822 (1) | 0.640 (1) | 0.659 (1) | 0.965 (2) | 0.493 (1) | 0.147 (5) | 0.711 (1) | 0.866 (1) | 0.631 (3) | 0.797 (1) | 0.663 (1) | 0.932 (2) | 0.849 (1) | 0.660 (1) |
| ActiveST | Gengxin Liu, Oliver van Kaick, Hui Huang, Ruizhen Hu: Active Self-Training for Weakly Supervised 3D Scene Semantic Segmentation. | 0.703 (2) | 0.977 (2) | 0.776 (3) | 0.657 (5) | 0.707 (1) | 0.874 (2) | 0.541 (2) | 0.744 (2) | 0.605 (2) | 0.610 (2) | 0.968 (1) | 0.442 (4) | 0.126 (6) | 0.705 (2) | 0.785 (2) | 0.742 (1) | 0.791 (2) | 0.586 (2) | 0.940 (1) | 0.839 (2) | 0.645 (2) |
| One-Thing-One-Click | Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu: One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation. CVPR 2021 | 0.594 (7) | 0.756 (6) | 0.722 (7) | 0.494 (12) | 0.546 (8) | 0.795 (7) | 0.371 (6) | 0.725 (6) | 0.559 (4) | 0.488 (7) | 0.957 (3) | 0.367 (8) | 0.261 (2) | 0.547 (4) | 0.575 (12) | 0.225 (12) | 0.671 (10) | 0.543 (6) | 0.904 (5) | 0.826 (3) | 0.557 (6) |
| GaIA | Min Seok Lee\*, Seok Woo Yang\*, Sung Won Han: GaIA: Graphical Information gain based Attention Network for Weakly Supervised 3D Point Cloud Semantic Segmentation. WACV 2023 | 0.638 (5) | 0.536 (12) | 0.783 (2) | 0.651 (6) | 0.600 (5) | 0.840 (3) | 0.413 (5) | 0.728 (4) | 0.490 (7) | 0.520 (6) | 0.948 (4) | 0.475 (2) | 0.299 (1) | 0.518 (6) | 0.680 (4) | 0.629 (4) | 0.729 (6) | 0.573 (4) | 0.906 (3) | 0.815 (4) | 0.626 (3) |
| DE-3DLearner LA | Ping-Chung Yu, Cheng Sun, Min Sun: Data Efficient 3D Learner via Knowledge Transferred from 2D Model. ECCV 2022 | 0.639 (4) | 0.839 (3) | 0.723 (6) | 0.681 (3) | 0.629 (4) | 0.839 (5) | 0.424 (4) | 0.728 (4) | 0.538 (5) | 0.526 (4) | 0.945 (6) | 0.427 (6) | 0.120 (7) | 0.511 (7) | 0.643 (6) | 0.547 (6) | 0.781 (3) | 0.566 (5) | 0.905 (4) | 0.809 (5) | 0.607 (4) |
| WS3D_LA_Sem | Kangcheng Liu: WS3D: Weakly Supervised 3D Scene Segmentation with Region-Level Boundary Awareness and Instance Discrimination. ECCV 2022 | 0.662 (3) | 0.812 (4) | 0.762 (4) | 0.742 (1) | 0.635 (3) | 0.828 (6) | 0.474 (3) | 0.736 (3) | 0.588 (3) | 0.546 (3) | 0.947 (5) | 0.450 (3) | 0.174 (4) | 0.536 (5) | 0.752 (3) | 0.668 (2) | 0.735 (5) | 0.583 (3) | 0.902 (6) | 0.797 (6) | 0.573 (5) |
| LE | | 0.608 (6) | 0.791 (5) | 0.726 (5) | 0.651 (6) | 0.589 (6) | 0.779 (9) | 0.346 (9) | 0.662 (8) | 0.493 (6) | 0.524 (5) | 0.923 (13) | 0.430 (5) | 0.234 (3) | 0.572 (3) | 0.638 (7) | 0.411 (10) | 0.708 (7) | 0.533 (8) | 0.855 (7) | 0.782 (7) | 0.508 (7) |
| VIBUS | Beiwen Tian, Liyi Luo, Hao Zhao, Guyue Zhou: VIBUS: Data-efficient 3D Scene Parsing with VIewpoint Bottleneck and Uncertainty-Spectrum Modeling. ISPRS Journal of Photogrammetry and Remote Sensing | 0.586 (8) | 0.736 (8) | 0.623 (11) | 0.664 (4) | 0.559 (7) | 0.840 (3) | 0.358 (7) | 0.666 (7) | 0.447 (8) | 0.429 (10) | 0.944 (7) | 0.421 (7) | 0.000 (13) | 0.411 (9) | 0.629 (8) | 0.614 (5) | 0.745 (4) | 0.541 (7) | 0.848 (9) | 0.758 (8) | 0.493 (8) |
| PointContrast_LA_SEM | | 0.550 (9) | 0.735 (9) | 0.676 (8) | 0.601 (9) | 0.475 (9) | 0.794 (8) | 0.288 (11) | 0.621 (10) | 0.378 (12) | 0.430 (9) | 0.940 (8) | 0.303 (10) | 0.089 (10) | 0.379 (10) | 0.580 (11) | 0.531 (7) | 0.689 (9) | 0.422 (11) | 0.852 (8) | 0.758 (8) | 0.468 (9) |
| CSC_LA_SEM | | 0.531 (11) | 0.659 (10) | 0.638 (10) | 0.578 (10) | 0.417 (11) | 0.775 (10) | 0.254 (12) | 0.537 (11) | 0.396 (10) | 0.439 (8) | 0.939 (10) | 0.284 (12) | 0.083 (11) | 0.414 (8) | 0.599 (10) | 0.488 (9) | 0.698 (8) | 0.444 (10) | 0.785 (11) | 0.747 (10) | 0.440 (10) |
| Viewpoint_BN_LA_AIR | Liyi Luo, Beiwen Tian, Hao Zhao, Guyue Zhou: Pointly-supervised 3D Scene Parsing with Viewpoint Bottleneck. | 0.548 (10) | 0.747 (7) | 0.574 (13) | 0.631 (8) | 0.456 (10) | 0.762 (11) | 0.355 (8) | 0.639 (9) | 0.412 (9) | 0.404 (11) | 0.940 (8) | 0.335 (9) | 0.107 (8) | 0.277 (12) | 0.645 (5) | 0.495 (8) | 0.666 (11) | 0.517 (9) | 0.818 (10) | 0.740 (11) | 0.431 (11) |
| Scratch_LA_SEM | | 0.382 (13) | 0.389 (13) | 0.606 (12) | 0.401 (13) | 0.303 (13) | 0.705 (13) | 0.169 (13) | 0.460 (13) | 0.292 (13) | 0.282 (13) | 0.939 (10) | 0.207 (13) | 0.004 (12) | 0.147 (13) | 0.201 (13) | 0.184 (13) | 0.592 (13) | 0.389 (13) | 0.409 (13) | 0.714 (12) | 0.250 (13) |
| SQN_LA | | 0.486 (12) | 0.587 (11) | 0.649 (9) | 0.527 (11) | 0.372 (12) | 0.718 (12) | 0.320 (10) | 0.510 (12) | 0.393 (11) | 0.325 (12) | 0.924 (12) | 0.290 (11) | 0.095 (9) | 0.287 (11) | 0.607 (9) | 0.356 (11) | 0.626 (12) | 0.416 (12) | 0.672 (12) | 0.680 (13) | 0.359 (12) |
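The relationship between the table's columns can be checked with a short Python sketch. It recomputes Q2E's avg IoU as the unweighted mean of its 20 per-class IoUs and reproduces the bookshelf ranks, including the shared rank 6 for GaIA and LE. Treating avg IoU as an unweighted class mean and the ranks as standard competition ranking (tied scores share a rank and the following rank is skipped) are assumptions inferred from the published numbers, not documented benchmark rules; `competition_ranks` is a hypothetical helper name.

```python
from statistics import fmean
from typing import List, Sequence

# Q2E's per-class IoUs copied from the table, in column order (bathtub ... window).
Q2E_CLASS_IOU = [
    0.984, 0.785, 0.684, 0.693, 0.879, 0.563, 0.822, 0.640, 0.659, 0.965,
    0.493, 0.147, 0.711, 0.866, 0.631, 0.797, 0.663, 0.932, 0.849, 0.660,
]

# Bookshelf IoUs copied from the table, in row order (Q2E ... SQN_LA).
BOOKSHELF_IOU = [
    0.684, 0.657, 0.494, 0.651, 0.681, 0.742, 0.651,
    0.664, 0.601, 0.578, 0.631, 0.401, 0.527,
]


def competition_ranks(scores: Sequence[float]) -> List[int]:
    """Rank higher-is-better scores; ties share a rank and the next rank is skipped."""
    return [1 + sum(other > s for other in scores) for s in scores]


print(round(fmean(Q2E_CLASS_IOU), 3))  # 0.721, matching Q2E's avg IoU
print(competition_ranks(BOOKSHELF_IOU))  # [2, 5, 12, 6, 3, 1, 6, 4, 9, 10, 8, 13, 11]
```

Because the per-class values are already rounded to three decimals, a recomputed mean can only be expected to agree with the published avg IoU to about that precision.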