3D Semantic Label with Limited Annotations Benchmark

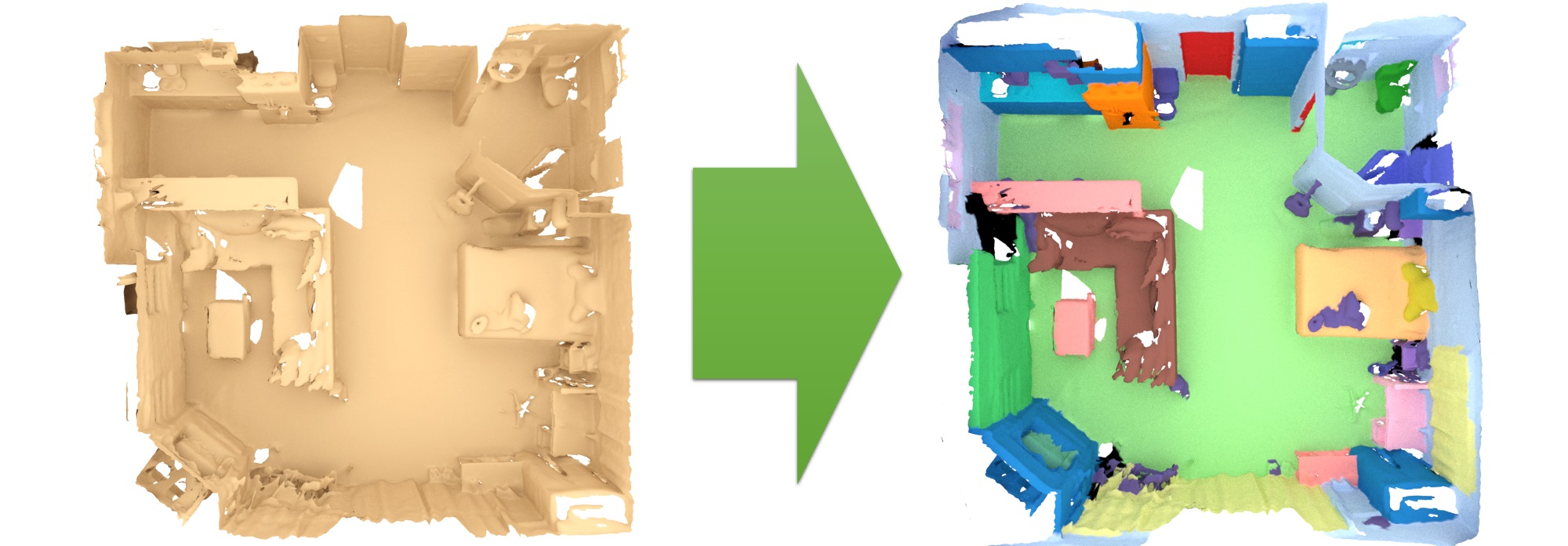

The 3D semantic labeling task involves predicting a semantic label for each vertex of a 3D scan mesh.

Evaluation and metrics

Our evaluation ranks all methods according to the PASCAL VOC intersection-over-union metric (IoU): IoU = TP / (TP + FP + FN), where TP, FP, and FN are the numbers of true-positive, false-positive, and false-negative vertices, respectively. Predicted labels are evaluated per vertex over the respective 3D scan mesh. Methods that operate on other 3D representations, such as voxel grids or point clouds, should map their predicted labels onto the mesh vertices; the evaluation helpers include one such example for mapping grid predictions to mesh vertices.
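The evaluation described above has two steps: put a method's predictions onto the mesh vertices, then count TP, FP, and FN per class over those vertices. The block below is a minimal sketch of that pipeline, not the benchmark's evaluation helper. It assumes predictions arrive as a sparse voxel grid (a dict from integer voxel index to label) whose origin is at (0, 0, 0), and the function names, `voxel_size`, and the `IGNORE = -1` convention for unannotated or unmapped vertices are all hypothetical.

```python
import math

IGNORE = -1  # hypothetical label for unannotated / unmapped vertices


def voxel_labels_to_vertices(vertices, voxel_labels, voxel_size):
    """Give each mesh vertex the label of the voxel containing it.

    Assumes an axis-aligned grid with its origin at (0, 0, 0); vertices that
    land in a voxel with no prediction get IGNORE.
    """
    labels = []
    for x, y, z in vertices:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        labels.append(voxel_labels.get(key, IGNORE))
    return labels


def per_class_iou(pred, gt, num_classes):
    """IoU = TP / (TP + FP + FN) for each class, counted over mesh vertices."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, g in zip(pred, gt):
        if g == IGNORE:
            continue  # assumption: unannotated vertices are not scored
        if p == g:
            tp[g] += 1
        else:
            fn[g] += 1  # the true class missed this vertex
            if 0 <= p < num_classes:
                fp[p] += 1  # the predicted class claimed it wrongly
    return [tp[c] / (tp[c] + fp[c] + fn[c]) if tp[c] + fp[c] + fn[c] else math.nan
            for c in range(num_classes)]


def mean_iou(ious):
    """Average over classes whose IoU is defined (present in gt or pred)."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid) if valid else math.nan


# Tiny example: four vertices along x, 5 cm voxels, two classes.
vertices = [(0.01, 0.01, 0.01), (0.03, 0.01, 0.01),
            (0.07, 0.01, 0.01), (0.09, 0.01, 0.01)]
voxel_labels = {(0, 0, 0): 0, (1, 0, 0): 1}
pred = voxel_labels_to_vertices(vertices, voxel_labels, voxel_size=0.05)
gt = [0, 1, 1, 1]
ious = per_class_iou(pred, gt, num_classes=2)
# pred == [0, 0, 1, 1]; IoU is 1/2 for class 0 and 2/3 for class 1
print(pred, ious, mean_iou(ious))
```

The voxel lookup is only one possible mapping; for point-based methods, a nearest-neighbor search from each vertex to the predicted points would play the same role before the identical IoU counting.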

The table below lists the benchmark results for the 3D semantic labeling with limited annotations scenario. Each score is followed by the method's rank in that column, and tied scores share a rank.

| Method | Avg IoU | bathtub | bed | bookshelf | cabinet | chair | counter | curtain | desk | door | floor | otherfurniture | picture | refrigerator | shower curtain | sink | sofa | table | toilet | wall | window |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DE-3DLearner LA | 0.695 (3) | 0.897 (3) | 0.784 (2) | 0.728 (5) | 0.697 (3) | 0.846 (4) | 0.441 (7) | 0.770 (3) | 0.615 (2) | 0.585 (3) | 0.951 (4) | 0.504 (1) | 0.232 (4) | 0.672 (3) | 0.760 (4) | 0.655 (4) | 0.772 (5) | 0.599 (3) | 0.877 (7) | 0.834 (4) | 0.678 (2) |
| Q2E | 0.739 (1) | 0.984 (1) | 0.797 (1) | 0.761 (2) | 0.716 (1) | 0.884 (1) | 0.588 (1) | 0.843 (1) | 0.589 (3) | 0.656 (1) | 0.971 (2) | 0.487 (2) | 0.271 (2) | 0.772 (1) | 0.807 (2) | 0.726 (2) | 0.795 (2) | 0.630 (1) | 0.945 (2) | 0.856 (1) | 0.693 (1) |
| GaIA | 0.643 (7) | 0.704 (11) | 0.776 (3) | 0.670 (10) | 0.597 (9) | 0.842 (5) | 0.382 (9) | 0.688 (9) | 0.413 (12) | 0.556 (4) | 0.950 (5) | 0.471 (3) | 0.334 (1) | 0.478 (10) | 0.728 (6) | 0.640 (5) | 0.787 (4) | 0.557 (7) | 0.937 (3) | 0.812 (6) | 0.531 (11) |
| VIBUS | 0.651 (6) | 0.868 (4) | 0.728 (11) | 0.675 (9) | 0.624 (7) | 0.861 (3) | 0.247 (13) | 0.734 (6) | 0.561 (5) | 0.520 (8) | 0.948 (6) | 0.464 (4) | 0.216 (6) | 0.670 (4) | 0.742 (5) | 0.589 (9) | 0.746 (7) | 0.579 (4) | 0.877 (7) | 0.800 (7) | 0.568 (6) |
| One-Thing-One-Click | 0.642 (8) | 0.725 (10) | 0.735 (7) | 0.717 (6) | 0.635 (6) | 0.829 (6) | 0.457 (5) | 0.639 (11) | 0.421 (11) | 0.552 (6) | 0.967 (3) | 0.460 (5) | 0.240 (3) | 0.558 (7) | 0.788 (3) | 0.621 (6) | 0.720 (8) | 0.477 (11) | 0.915 (5) | 0.842 (3) | 0.539 (8) |
| ActiveST | 0.725 (2) | 0.980 (2) | 0.764 (4) | 0.753 (3) | 0.699 (2) | 0.863 (2) | 0.521 (2) | 0.773 (2) | 0.671 (1) | 0.625 (2) | 0.974 (1) | 0.456 (6) | 0.182 (9) | 0.721 (2) | 0.874 (1) | 0.746 (1) | 0.808 (1) | 0.628 (2) | 0.960 (1) | 0.846 (2) | 0.664 (3) |
| LE | 0.652 (5) | 0.816 (7) | 0.760 (5) | 0.747 (4) | 0.648 (5) | 0.807 (8) | 0.455 (6) | 0.765 (4) | 0.517 (7) | 0.523 (7) | 0.941 (11) | 0.452 (7) | 0.190 (8) | 0.586 (5) | 0.691 (7) | 0.525 (11) | 0.762 (6) | 0.552 (8) | 0.930 (4) | 0.795 (9) | 0.580 (4) |
| WS3D_LA_Sem | 0.670 (4) | 0.842 (6) | 0.732 (8) | 0.825 (1) | 0.657 (4) | 0.794 (10) | 0.506 (3) | 0.762 (5) | 0.584 (4) | 0.553 (5) | 0.947 (7) | 0.451 (8) | 0.219 (5) | 0.585 (6) | 0.652 (8) | 0.670 (3) | 0.791 (3) | 0.570 (5) | 0.857 (11) | 0.816 (5) | 0.579 (5) |
| Viewpoint_BN_LA_AIR | 0.623 (9) | 0.812 (8) | 0.743 (6) | 0.654 (11) | 0.579 (11) | 0.800 (9) | 0.462 (4) | 0.713 (7) | 0.533 (6) | 0.516 (9) | 0.944 (8) | 0.434 (9) | 0.215 (7) | 0.437 (11) | 0.521 (12) | 0.601 (7) | 0.720 (8) | 0.563 (6) | 0.884 (6) | 0.800 (7) | 0.534 (10) |
| CSC_LA_SEM | 0.612 (11) | 0.747 (9) | 0.731 (9) | 0.679 (8) | 0.603 (8) | 0.815 (7) | 0.400 (8) | 0.648 (10) | 0.453 (9) | 0.481 (11) | 0.944 (8) | 0.421 (10) | 0.173 (10) | 0.504 (8) | 0.623 (10) | 0.588 (10) | 0.690 (12) | 0.545 (9) | 0.877 (7) | 0.778 (11) | 0.541 (7) |
| PointContrast_LA_SEM | 0.614 (10) | 0.844 (5) | 0.731 (9) | 0.681 (7) | 0.590 (10) | 0.791 (11) | 0.348 (11) | 0.689 (8) | 0.503 (8) | 0.502 (10) | 0.942 (10) | 0.361 (11) | 0.154 (12) | 0.484 (9) | 0.624 (9) | 0.591 (8) | 0.708 (10) | 0.524 (10) | 0.874 (10) | 0.793 (10) | 0.536 (9) |
| SQN_LA | 0.542 (12) | 0.568 (13) | 0.674 (13) | 0.618 (13) | 0.462 (12) | 0.772 (12) | 0.351 (10) | 0.567 (12) | 0.443 (10) | 0.378 (13) | 0.931 (13) | 0.335 (12) | 0.173 (10) | 0.392 (12) | 0.623 (10) | 0.455 (13) | 0.688 (13) | 0.466 (12) | 0.769 (13) | 0.720 (13) | 0.450 (12) |
| Scratch_LA_SEM | 0.524 (13) | 0.640 (12) | 0.690 (12) | 0.636 (12) | 0.442 (13) | 0.756 (13) | 0.326 (12) | 0.544 (13) | 0.365 (13) | 0.396 (12) | 0.940 (12) | 0.284 (13) | 0.085 (13) | 0.333 (13) | 0.479 (13) | 0.502 (12) | 0.696 (11) | 0.453 (13) | 0.785 (12) | 0.746 (12) | 0.372 (13) |

Publications, where listed:

- DE-3DLearner LA: Ping-Chung Yu, Cheng Sun, Min Sun, "Data Efficient 3D Learner via Knowledge Transferred from 2D Model," ECCV 2022.
- GaIA: Min Seok Lee\*, Seok Woo Yang\*, Sung Won Han, "GaIA: Graphical Information gain based Attention Network for Weakly Supervised 3D Point Cloud Semantic Segmentation," WACV 2023.
- VIBUS: Beiwen Tian, Liyi Luo, Hao Zhao, Guyue Zhou, "VIBUS: Data-efficient 3D Scene Parsing with VIewpoint Bottleneck and Uncertainty-Spectrum Modeling," ISPRS Journal of Photogrammetry and Remote Sensing.
- One-Thing-One-Click: Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu, "One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation," CVPR 2021.
- ActiveST: Gengxin Liu, Oliver van Kaick, Hui Huang, Ruizhen Hu, "Active Self-Training for Weakly Supervised 3D Scene Semantic Segmentation."
- WS3D_LA_Sem: Kangcheng Liu, "WS3D: Weakly Supervised 3D Scene Segmentation with Region-Level Boundary Awareness and Instance Discrimination," ECCV 2022.
- Viewpoint_BN_LA_AIR: Liyi Luo, Beiwen Tian, Hao Zhao, Guyue Zhou, "Pointly-supervised 3D Scene Parsing with Viewpoint Bottleneck."
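The avg IoU column appears to be the unweighted mean of the 20 class IoUs: DE-3DLearner LA's class scores average to 0.69485 (listed as 0.695) and Q2E's to 0.73855 (listed as 0.739). The ranks follow standard competition ranking, where tied scores share a rank and the following ranks are skipped; for example, three methods score 0.877 on toilet and all rank 7, and the next method ranks 10. The sketch below reproduces both from values copied out of the table. It is an illustrative check, not the benchmark's own scoring code, and the names are hypothetical. Because the class IoUs are rounded to three decimals, a recomputed mean can differ from the listed average in the last digit.

```python
# Per-class IoUs of DE-3DLearner LA, copied from the table
# (bathtub, bed, bookshelf, ..., toilet, wall, window).
DE_3DLEARNER_LA = [0.897, 0.784, 0.728, 0.697, 0.846, 0.441, 0.770, 0.615, 0.585, 0.951,
                   0.504, 0.232, 0.672, 0.760, 0.655, 0.772, 0.599, 0.877, 0.834, 0.678]

# Toilet IoUs in table row order; three methods tie at 0.877.
TOILET = [0.877, 0.945, 0.937, 0.877, 0.915, 0.960, 0.930,
          0.857, 0.884, 0.877, 0.874, 0.769, 0.785]


def mean_iou(class_ious):
    """Unweighted mean over classes."""
    return sum(class_ious) / len(class_ious)


def competition_rank(scores):
    """Standard competition ranking: rank = 1 + number of strictly better scores,
    so tied scores share a rank and the following ranks are skipped."""
    return [1 + sum(other > s for other in scores) for s in scores]


print(f"{mean_iou(DE_3DLEARNER_LA):.5f}")  # 0.69485, shown in the table as 0.695
print(competition_rank(TOILET))  # [7, 2, 3, 7, 5, 1, 4, 11, 6, 7, 10, 13, 12]
```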