3D Semantic Label with Limited Reconstructions Benchmark

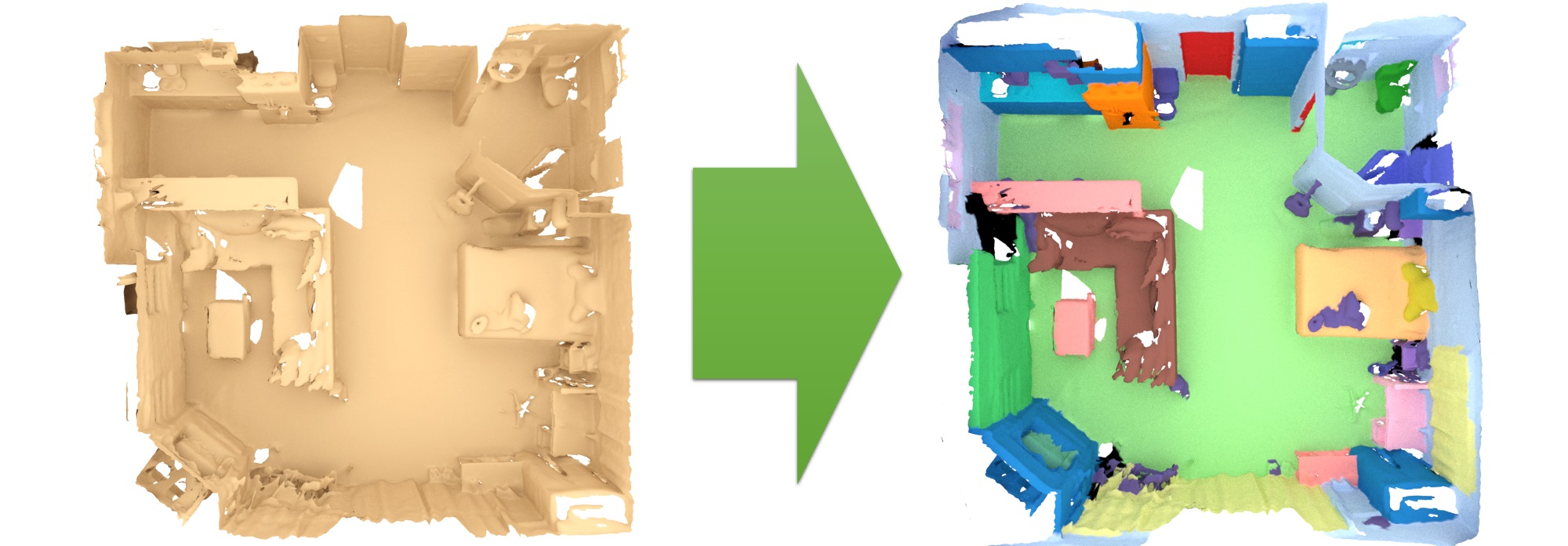

The 3D semantic labeling task involves predicting a semantic label for each vertex of a 3D scan mesh.

Evaluation and metrics

Our evaluation ranks all methods according to the PASCAL VOC intersection-over-union metric (IoU). IoU = TP/(TP+FP+FN), where TP, FP, and FN are the numbers of true positive, false positive, and false negative vertices, respectively. Predicted labels are evaluated per-vertex over the respective 3D scan mesh; 3D approaches that operate on other representations, such as grids or points, should map their predicted labels onto the mesh vertices (an example that maps grid predictions to mesh vertices is provided in the evaluation helpers).
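
The metric and the grid-to-vertex mapping described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the benchmark's evaluation script: the function names `voxel_labels_to_vertices` and `per_class_iou`, the `origin` and `voxel_size` parameters, and the convention that label `0` marks unannotated vertices to be skipped are all assumptions made for the example.

```python
import numpy as np


def voxel_labels_to_vertices(vertices, voxel_labels, origin, voxel_size, ignore_label=0):
    """Give each mesh vertex the label of the voxel that contains it.

    vertices:     (N, 3) float array of vertex positions
    voxel_labels: (X, Y, Z) integer array of predicted labels
    origin, voxel_size: hypothetical grid placement parameters
    Vertices outside the grid receive ignore_label (an assumption).
    """
    idx = np.floor((np.asarray(vertices) - origin) / voxel_size).astype(int)
    shape = np.array(voxel_labels.shape)
    inside = np.all((idx >= 0) & (idx < shape), axis=1)
    labels = np.full(len(idx), ignore_label, dtype=voxel_labels.dtype)
    i = idx[inside]
    labels[inside] = voxel_labels[i[:, 0], i[:, 1], i[:, 2]]
    return labels


def per_class_iou(gt, pred, class_ids, ignore_label=0):
    """Per-vertex IoU = TP / (TP + FP + FN) for each class id.

    Vertices whose ground-truth label equals ignore_label are skipped
    (assumed convention). Classes absent from both arrays yield NaN.
    """
    gt, pred = np.asarray(gt), np.asarray(pred)
    valid = gt != ignore_label
    gt, pred = gt[valid], pred[valid]
    ious = {}
    for c in class_ids:
        tp = np.sum((gt == c) & (pred == c))
        fp = np.sum((gt != c) & (pred == c))
        fn = np.sum((gt == c) & (pred != c))
        denom = tp + fp + fn
        ious[c] = tp / denom if denom > 0 else float("nan")
    return ious


# Toy example: five vertices, two classes, one unannotated vertex (label 0).
gt = np.array([1, 1, 2, 2, 0])
pred = np.array([1, 2, 2, 2, 1])
ious = per_class_iou(gt, pred, class_ids=[1, 2])
mean_iou = np.nanmean(list(ious.values()))
```

In the toy example, class 1 has one true positive and one false negative, and class 2 has two true positives and one false positive. Averaging the per-class values corresponds to the "avg iou" column only under the assumption that the benchmark averages over classes in the same way.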

This table lists the benchmark results for the 3D semantic label with limited reconstructions scenario. Each cell gives the IoU followed by the method's rank in that column.

| Method | Info | avg IoU | bathtub | bed | bookshelf | cabinet | chair | counter | curtain | desk | door | floor | otherfurniture | picture | refrigerator | shower curtain | sink | sofa | table | toilet | wall | window |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| WS3D_LR_Sem | Kangcheng Liu: WS3D: Weakly Supervised 3D Scene Segmentation with Region-Level Boundary Awareness and Instance Discrimination. European Conference on Computer Vision (ECCV), 2022 | 0.682 (1) | 0.863 (1) | 0.765 (2) | 0.782 (1) | 0.648 (1) | 0.803 (7) | 0.438 (3) | 0.793 (1) | 0.607 (1) | 0.589 (1) | 0.944 (3) | 0.455 (1) | 0.223 (2) | 0.536 (2) | 0.768 (1) | 0.726 (1) | 0.758 (2) | 0.623 (1) | 0.906 (1) | 0.821 (2) | 0.596 (3) |
| NWSYY | | 0.678 (2) | 0.779 (4) | 0.782 (1) | 0.774 (2) | 0.637 (2) | 0.827 (4) | 0.491 (1) | 0.736 (2) | 0.597 (2) | 0.561 (2) | 0.947 (2) | 0.438 (2) | 0.206 (3) | 0.610 (1) | 0.758 (2) | 0.667 (2) | 0.773 (1) | 0.594 (3) | 0.880 (2) | 0.824 (1) | 0.673 (1) |
| CSC_LR_SEM | | 0.575 (4) | 0.671 (8) | 0.740 (3) | 0.727 (3) | 0.445 (6) | 0.847 (1) | 0.380 (7) | 0.602 (5) | 0.512 (5) | 0.447 (5) | 0.942 (4) | 0.291 (5) | 0.184 (4) | 0.353 (8) | 0.468 (8) | 0.508 (6) | 0.745 (4) | 0.602 (2) | 0.855 (3) | 0.765 (5) | 0.420 (8) |
| CSG_3DSegNet | | 0.570 (5) | 0.717 (6) | 0.730 (4) | 0.697 (4) | 0.521 (3) | 0.823 (5) | 0.377 (8) | 0.419 (8) | 0.531 (3) | 0.452 (4) | 0.935 (8) | 0.316 (3) | 0.147 (5) | 0.359 (7) | 0.551 (7) | 0.551 (4) | 0.692 (7) | 0.513 (5) | 0.797 (6) | 0.764 (6) | 0.508 (4) |
| Viewpoint_BN_LR_AIR | | 0.566 (6) | 0.780 (3) | 0.659 (8) | 0.677 (5) | 0.484 (4) | 0.799 (8) | 0.419 (5) | 0.636 (4) | 0.480 (6) | 0.432 (7) | 0.940 (5) | 0.238 (8) | 0.124 (6) | 0.396 (5) | 0.609 (3) | 0.432 (8) | 0.735 (5) | 0.527 (4) | 0.787 (7) | 0.752 (8) | 0.423 (7) |
| PointContrast_LR_SEM | | 0.555 (7) | 0.711 (7) | 0.668 (6) | 0.622 (6) | 0.425 (7) | 0.830 (2) | 0.433 (4) | 0.552 (6) | 0.273 (8) | 0.440 (6) | 0.938 (6) | 0.287 (7) | 0.096 (7) | 0.470 (3) | 0.576 (5) | 0.612 (3) | 0.687 (8) | 0.438 (8) | 0.781 (8) | 0.785 (4) | 0.474 (5) |
| DE-3DLearner LR | Ping-Chung Yu, Cheng Sun, Min Sun: Data Efficient 3D Learner via Knowledge Transferred from 2D Model. ECCV 2022 | 0.608 (3) | 0.853 (2) | 0.689 (5) | 0.593 (7) | 0.483 (5) | 0.830 (2) | 0.466 (2) | 0.652 (3) | 0.528 (4) | 0.482 (3) | 0.954 (1) | 0.288 (6) | 0.250 (1) | 0.448 (4) | 0.595 (4) | 0.532 (5) | 0.748 (3) | 0.503 (6) | 0.822 (4) | 0.806 (3) | 0.647 (2) |
| Scratch_LR_SEM | | 0.531 (8) | 0.750 (5) | 0.666 (7) | 0.553 (8) | 0.409 (8) | 0.816 (6) | 0.387 (6) | 0.487 (7) | 0.285 (7) | 0.368 (8) | 0.938 (6) | 0.310 (4) | 0.074 (8) | 0.388 (6) | 0.564 (6) | 0.468 (7) | 0.698 (6) | 0.448 (7) | 0.804 (5) | 0.761 (7) | 0.454 (6) |
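
The rank shown next to each score is consistent with standard competition ranking, where tied scores share the best rank and the next rank is skipped (for example, two methods at 0.830 on chair are both ranked 2 and the next method is ranked 4). The sketch below reproduces the chair column under that assumption. The helper name `competition_rank` is hypothetical, and the benchmark's own ranking code may differ, for instance by ranking on unrounded scores.

```python
def competition_rank(scores):
    """Return 1-based ranks where a higher score is better.

    A method's rank is one plus the number of methods with a strictly
    higher score, so ties share a rank and the following rank is skipped.
    """
    return {
        name: 1 + sum(other > score for other in scores.values())
        for name, score in scores.items()
    }


# Chair IoU values as listed in the table above.
chair = {
    "WS3D_LR_Sem": 0.803,
    "NWSYY": 0.827,
    "CSC_LR_SEM": 0.847,
    "CSG_3DSegNet": 0.823,
    "Viewpoint_BN_LR_AIR": 0.799,
    "PointContrast_LR_SEM": 0.830,
    "DE-3DLearner LR": 0.830,
    "Scratch_LR_SEM": 0.816,
}
chair_ranks = competition_rank(chair)
```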