Data

If you want to participate to this benchmark, you can download the benchmark data for the localization task here. Learn more about the ScanRefer project at our project website.

General Info

Code page: Detailed information about our dataset and file formats is provided on our github page, please see our git repo.

Download ScanRefer dataset: If you would like to download the public ScanRefer dataset (for training purposes), please fill out the ScanRefer Terms of Use Form. Once your request is accepted, you will receive an email with the download link.

Documentation

Challenge Guidelines

Guidelines for Localization Challenge

Localization Metrics

Evaluation Break-down

Submission Format

Guidelines for Dense Captioning Challenge

Dense Captioning Metrics

Captioning F1-Score

Dense Captioning Mean Average Precision

Object Detection Mean Average Precision

Submission Format

Submission Policy

Please note that for every user is allowed to submit the test set results of each method for only twice.

The data in the ScanRefer dataset releases may be used for learning the parameters of the algorithms. The test data should be used strictly for reporting the final results -- this benchmark is not meant for iterative testing sessions or parameter tweaking.

Parameter tuning is only allowed on the training data. Evaluating on the test data via this evaluation server must only be done once for the final system. It is not permitted to use it to train systems, for example by trying out different parameter values and choosing the best. Only one version must be evaluated (which performed best on the training data). This is to avoid overfitting on the test data. Results of different parameter settings of an algorithm can therefore only be reported on the training set. To help enforcing this policy, we block updates to the test set results of a method for two weeks after a test set submission. You can split up the training data into training and validation sets yourself as you wish.

It is not permitted to register on this webpage with multiple e-mail addresses. We will ban users or domains if required.

Challenge Guidelines

Results for a method must be uploaded as a single .zip or .7z file, which when unzipped must contain a single .json file. In other words: There must not be any additional files or folders in the archive except your predictions.

Guidelines for Localization Challenge

Localization Metrics

Acc@kIoUTo evaluate the performance of our method, we measure the thresholded accuracy where the positive predictions have higher intersection over union (IoU) with the ground truths than the thresholds. Similar to work with 2D images, we use Acc@kIoU as our metric, where the threshold value k for IoU is set to 0.25 and 0.5 in our experiments.

Evaluation Break-down

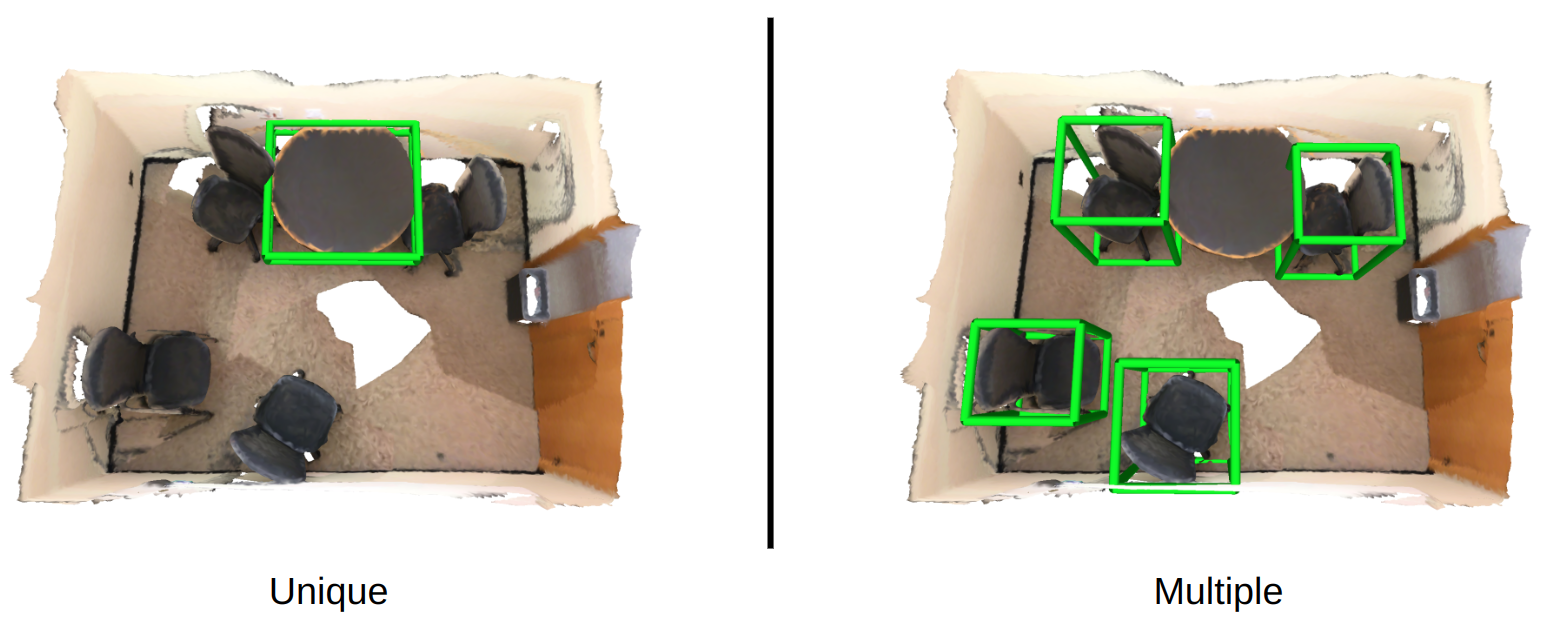

To understand how informative the input description is beyond capturing the object category, we analyze the performance of the methods on “unique” and “multiple” subsets from test split, respectively. The “unique” subset contains samples where only one unique object from a certain category matches the description, while the “multiple” subset contains ambiguous cases where there are multiple objects of the same category.

Submission Format

The localization results for ScanRefer challenge should be saved as a JSON file, and must be parsable to a list of Python dictionaries. Each prediction of this list should include these fields: "scene_id", "object_id", "ann_id", "bbox".

E.g., a prediction JSON could look like:

[

...

{

"scene_id": # ScanNet scene ID

"object_id": # ScanNet object ID

"ann_id": # ScanRefer annotation ID

"bbox": # predicted bounding box of shape (8, 3)

}

...

]

You can generate the JSON file containing all necessary data for submission using this script with minor tweaks.

Guidelines for Dense Captioning Challenge

Dense Captioning Metrics

Captioning F1-Score

To jointly measure the quality of the generated description

and the detected bounding boxes, we evaluate the descriptions

by combining standard image captioning metrics such as CIDEr and BLEU, with

Intersection-over-Union (IoU) scores between predicted

bounding boxes and the matched ground truth bounding boxes.

For \(N^{\text{pred}}\) predicted bounding boxes and \(N^{\text{GT}}\) ground truth bounding boxes,

we define the captioning precision \(M^{\text{P}}@k\text{IoU}\) as:

\[M^{\text{P}}@k\text{IoU} = \frac{1}{N^{\text{pred}}} \sum^{N^{\text{pred}}}_{i=1} m_i u_i \]

\(u_i \in \{0, 1\}\) is set to 1 if the IoU score for the \(i^{th}\) box is greater than k, otherwise 0.

We use \(m\) to represent the captioning metrics CIDEr, BLEU, METEOR and ROUGE.

Similarly, we define the captioning recall \(M^{\text{R}}@k\text{IoU}\) as:

\[M^{\text{R}}@k\text{IoU} = \frac{1}{N^{\text{GT}}} \sum^{N^{\text{GT}}}_{i=1} m_i u_i \]

Finally, we combine the captioning precision and recall as the captioning f1-score as the final metric for captioning:

\[M^{\text{F1}}@k\text{IoU} = \frac{2 \times M^{\text{P}}@k\text{IoU} \times M^{\text{R}}@k\text{IoU} }{M^{\text{P}}@k\text{IoU} + M^{\text{R}}@k\text{IoU}} \]

Inspired by evaluation metrics in dense captioning in images, we propose to measure the mean Average Precision (AP) across a range of thresholds for both localization and language accuracy. For localization we use intersection over union (IoU) thresholds .1, .2, .3, .4, .5. For language we use METEOR score thresholds .15, .3, .45, .6, .75. We adopt METEOR since this metric was found to be most highly correlated with human judgments in settings with a low number of references. We measure the average precision across all pairwise settings of these thresholds and report the mean AP.

Object Detection Mean Average PrecisionWe use the conventional mean average precision (mAP) thresholded by IoU as the object detection metric.

Submission Format

The localization results for ScanRefer challenge should be saved as a JSON file, and must be parsable to a Python dictionary, where the key of the predictions should be ScanNet scene IDs. Each prediction of the scene should be a list of Python dictionaries, containing these fields: "caption", "box", "sem_prob", "obj_prob".

E.g., a prediction JSON could look like:

{

...

"scene_id": { # ScanNet scene ID

"caption": # predicted caption, padded with "sos" (start of sentence) and "eos" (end to sentence)

"box": # predicted bounding box of shape (8, 3)

"sem_prob": # predicted semantic logits of the bounding box of shape (18,)

"obj_prob": # predicted objectness logits of the bounding box of shape (2,)

}

...

}

You can generate the JSON file containing all necessary data for submission using this script with minor tweaks.