Documentation

Class labels and ids

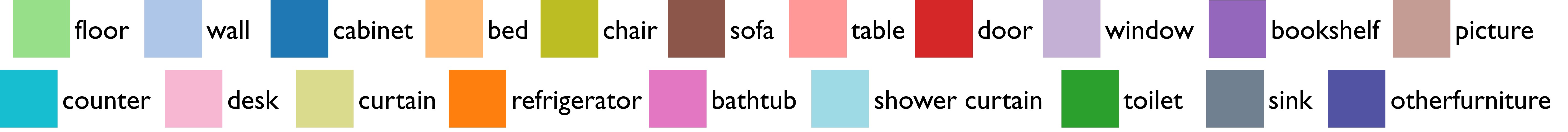

Label IDs for the ScanNet benchmark

Label IDs for the ScanNet200 benchmark

Scene type classification

Submission format

Format for 3D Semantic Label Prediction

Format for 3D Semantic Instance Prediction

Format for 2D Semantic Label Prediction

Format for 2D Semantic Instance Prediction

Format for Scene Type Classification

Submission policy

The 1513 scans of the ScanNet dataset release may be used for learning the parameters of the algorithms. The test data should be used strictly for reporting the final results; this benchmark is not meant for iterative testing sessions or parameter tweaking.

Parameter tuning is only allowed on the training data. Evaluation on the test data via this evaluation server must be done only once, for the final system. It is not permitted to use the server to train systems, for example by trying out different parameter values and choosing the best. Only one version may be evaluated (the one that performed best on the training data). This is to avoid overfitting on the test data. Results of different parameter settings of an algorithm can therefore only be reported on the training set. To help enforce this policy, we block updates to the test set results of a method for two weeks after a test set submission. You can split the training data into training and validation sets as you wish.
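One possible way to carve a validation set out of the training scans is to split at the scene level rather than the scan level. The sketch below assumes ScanNet's sceneXXXX_YY naming, where scans sharing a sceneXXXX prefix are re-scans of the same room. Keeping them on the same side avoids near-duplicate rooms in both sets. The function name split_by_scene and its parameters are illustrative and not part of any ScanNet tool.

```python
import random
from collections import defaultdict


def split_by_scene(scan_ids, val_fraction=0.2, seed=0):
    """Split scan ids (e.g. 'scene0000_01') into sorted train/val lists.

    All scans sharing a scene prefix ('scene0000') land on the same side,
    so re-scans of one room never appear in both sets.
    """
    groups = defaultdict(list)
    for scan_id in scan_ids:
        scene = scan_id.rsplit("_", 1)[0]  # 'scene0000_01' -> 'scene0000'
        groups[scene].append(scan_id)

    scenes = sorted(groups)
    random.Random(seed).shuffle(scenes)  # deterministic for a fixed seed
    n_val = int(round(len(scenes) * val_fraction))
    val_scenes = set(scenes[:n_val])

    train = sorted(s for sc in scenes if sc not in val_scenes for s in groups[sc])
    val = sorted(s for sc in val_scenes for s in groups[sc])
    return train, val
```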

It is not permitted to register on this webpage with multiple e-mail addresses or with information misrepresenting the identity of the user. We will ban users or domains if required.

Data

Download: If you would like to download the ScanNet data, please fill out an agreement to the ScanNet Terms of Use and send it to us at the ScanNet group email. For more information regarding the ScanNet dataset, please see our git repo. The ScanNet and ScanNet200 benchmarks share the same source data but differ in how the annotations are parsed. Please refer to the provided preprocessing scripts for ScanNet200.

Task Data Requirements: For all tasks, both 2D and 3D data modalities can be used as input.

2D Data: As the number of 2D RGB-D frames is particularly large (≈2.5 million), we provide an option to download a smaller subset

Data Formats

3D Data: 3D data is provided with the RGB-D video sequences (depth-color aligned) as well as reconstructed meshes as

2D Data: 2D label and instance data are provided as

The 2D data provided with the ScanNet release (

- Label images: 16-bit .png where each pixel stores the id value from scannetv2-labels.combined.tsv (0 corresponds to unannotated or no depth).
- Instance images: 8-bit .png where each pixel stores an integer value per distinct instance (0 corresponds to unannotated or no depth).
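The following is a minimal sketch of converting the release's per-pixel id values to nyu40id values using scannetv2-labels.combined.tsv. It assumes the TSV has a header row with columns named id and nyu40id. It treats the pixels as a flat list of integers and leaves out decoding the 16-bit PNG itself, for example with an image library. The helper names are hypothetical.

```python
import csv


def load_id_to_nyu40(tsv_lines):
    """Build {raw id -> nyu40id} from the lines of scannetv2-labels.combined.tsv.

    Accepts an open file or any iterable of lines. Assumes a header row
    containing 'id' and 'nyu40id' columns.
    """
    mapping = {}
    for row in csv.DictReader(tsv_lines, delimiter="\t"):
        if row.get("id") and row.get("nyu40id"):
            mapping[int(row["id"])] = int(row["nyu40id"])
    return mapping


def remap_pixels(pixels, mapping):
    """Map raw label ids to nyu40ids.

    0 (unannotated / no depth) stays 0; ids missing from the mapping also
    become 0.
    """
    return [mapping.get(p, 0) if p != 0 else 0 for p in pixels]
```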

We also provide a preprocessed subset of the ScanNet frames,

- Label images: 8-bit .png where each pixel stores the nyu40id value from scannetv2-labels.combined.tsv (0 corresponds to unannotated or no depth).
- Instance images: 16-bit .png where each pixel stores an integer value per distinct instance, computed as label*1000+inst. Here, label is the nyu40id label and inst is a 1-indexed value counting the instances of the respective label in the image. Note that walls, floors, and ceilings do not contain instances, and that 0 corresponds to unannotated or no depth.
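The label*1000+inst encoding of the preprocessed instance images can be packed and unpacked with integer division. Below is a small sketch with hypothetical helper names. The range check on inst is our own sanity check, since a counter of 1000 or more would spill into the label digits.

```python
def encode_instance(label, inst):
    """Pack a nyu40id label and a 1-indexed instance counter as label*1000+inst."""
    # Own sanity check: inst >= 1000 would overflow into the label digits.
    if not 1 <= inst <= 999:
        raise ValueError("inst must be in 1..999")
    return label * 1000 + inst


def decode_instance(value):
    """Split an instance-image pixel value into (nyu40id label, inst).

    Returns None for 0 (unannotated or no depth). A nonzero value with a
    zero remainder would decode to (label, 0). The format description does
    not say whether such values occur, so they are passed through unchanged.
    """
    if value == 0:
        return None
    return divmod(value, 1000)
```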

Class labels and ids

ScanNet Benchmark

2D/3D semantic label and instance prediction: We use the NYUv2 40-label set (see all label ids here) and evaluate on the 20-class subset defined here. Note that for instance tasks, the
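Training code often works with contiguous class indices, while predictions must use the benchmark's label ids. The sketch below shows the round trip between the two. It assumes the 20 evaluated NYUv2-40 ids are the ones used in the public ScanNet evaluation scripts, to our understanding; please verify them against the linked class definition. IGNORE_INDEX and the helper names are hypothetical.

```python
# Assumed NYUv2-40 ids of the 20 evaluated classes (check the linked definition).
VALID_CLASS_IDS = (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14, 16, 24, 28, 33, 34, 36, 39)
IGNORE_INDEX = -1  # hypothetical marker for classes outside the subset


def build_remap(valid_ids=VALID_CLASS_IDS, max_id=40):
    """Return a list mapping nyu40id -> contiguous training index (or IGNORE_INDEX)."""
    remap = [IGNORE_INDEX] * (max_id + 1)
    for train_idx, nyu_id in enumerate(valid_ids):
        remap[nyu_id] = train_idx
    return remap


def to_benchmark_ids(train_indices, valid_ids=VALID_CLASS_IDS):
    """Map contiguous training indices back to the label ids written to submissions."""
    return [valid_ids[i] for i in train_indices]
```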

ScanNet200 Benchmark

- This benchmark follows the original train/val/test scene splits published with this dataset and available here.

- We further split the 200 categories into three sets based on their point and instance frequencies, namely head, common, and tail. The category splits can be found in scannet200_split.py here.
- The raw annotations in the training set contain 550 distinct categories, many of which appear only once; these were filtered to produce the large-vocabulary, challenging ScanNet200 setting.

- The mapping of annotation category IDs to the valid ScanNet200 categories can be found in scannet200_constants.py here.
- For preprocessing the raw data and color labels, please refer to the repository.
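Once per-class scores are available, for example from your own validation runs, the head/common/tail grouping can be summarized by averaging within each split. This sketch is hypothetical. It does not reproduce the benchmark's own evaluation and does not assume the variable names used in scannet200_split.py.

```python
from statistics import mean


def mean_per_split(per_class_scores, splits):
    """Average per-class scores within each category split.

    per_class_scores: {category name -> score}, e.g. per-class IoU or AP.
    splits: {split name -> iterable of category names}, e.g. head/common/tail.
    Categories without a score are skipped; an empty split yields NaN.
    """
    result = {}
    for split_name, categories in splits.items():
        scores = [per_class_scores[c] for c in categories if c in per_class_scores]
        result[split_name] = mean(scores) if scores else float("nan")
    return result
```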

Scene type classification

There are 20 different scene types for the ScanNet dataset, described with ids here. We evaluate on a 13-class subset defined here.

Submission format

Results for a method must be uploaded as a single .zip or .7z file (7z is preferred due to its smaller file sizes). When unpacked, the archive must contain the prediction files in its root. The archive must not contain any files or folders other than those specified below.
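The sketch below packs a prediction folder so that its contents sit at the archive root, using Python's standard zipfile module. Although .7z is preferred, creating .7z archives requires an external tool, so this example writes a .zip instead. The function name is hypothetical.

```python
import os
import zipfile


def pack_submission(pred_dir, archive_path):
    """Zip everything under pred_dir so its contents sit at the archive root.

    Entries are stored relative to pred_dir (e.g. 'scene0707_00.txt',
    'predicted_masks/scene0707_00_000.txt'), so no wrapping folder is added.
    archive_path should lie outside pred_dir so the archive is not
    included in itself.
    """
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(pred_dir):
            for name in sorted(files):
                full_path = os.path.join(root, name)
                arcname = os.path.relpath(full_path, pred_dir)
                zf.write(full_path, arcname=arcname.replace(os.sep, "/"))
```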

Format for 3D Semantic Label Prediction

For both the ScanNet and ScanNet200 3D semantic label prediction tasks, results must be provided as class labels per vertex of the corresponding 3D scan mesh, i.e., for each vertex in the order provided by the

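A submission must contain a .txt prediction file for each test scan, named according to the corresponding test scan, as

unzip_root/
|-- scene0707_00.txt
|-- scene0708_00.txt
|-- scene0709_00.txt
⋮
|-- scene0806_00.txt

Each prediction file contains one integer label id per line, with one line per vertex. For example, a prediction file could look like:

10
10
2
2
2
⋮
39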
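Writing one prediction file per scan might look like the sketch below, with one label id per line in mesh-vertex order. The function name is hypothetical. The labels are assumed to already be the benchmark's label ids.

```python
import os


def write_semantic_prediction(out_dir, scan_id, vertex_labels):
    """Write <out_dir>/<scan_id>.txt with one predicted label id per line.

    vertex_labels must follow the vertex order of the scan's mesh, so the
    file has exactly one line per vertex.
    """
    path = os.path.join(out_dir, f"{scan_id}.txt")
    with open(path, "w") as f:
        f.writelines(f"{int(label)}\n" for label in vertex_labels)
    return path
```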

Format for 3D Semantic Instance Prediction

Results must be provided as a text file for each test scan. Each text file should contain a line for each instance, containing the relative path to a binary mask of the instance, the predicted label id, and the confidence of the prediction. The result text files must be named according to the corresponding test scan, as

unzip_root/
|-- scene0707_00.txt
|-- scene0708_00.txt
|-- scene0709_00.txt
⋮
|-- scene0806_00.txt
|-- predicted_masks/
    |-- scene0707_00_000.txt
    |-- scene0707_00_001.txt
    ⋮

Each prediction file for a scan should contain a list of instances, where an instance is: (1) the relative path to the predicted mask file, (2) the integer class label id, and (3) the float confidence score. Each line in the prediction file should correspond to one instance, with the three values above separated by spaces. Thus, the filenames in the prediction files must not contain spaces. For example, scene0707_00.txt could contain:

predicted_masks/scene0707_00_000.txt 10 0.7234
predicted_masks/scene0707_00_001.txt 36 0.9038
⋮

The predicted instance mask file should provide a mask over the vertices of the scan mesh, i.e., for each vertex in the order provided by the

For example, a mask file could contain one value per vertex (1 if the vertex belongs to the instance, 0 otherwise):

0
0
0
1
1
⋮
0
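The sketch below writes the per-scan instance file together with its predicted_masks/ folder. It follows the example layout of this section: zero-padded three-digit mask suffixes and confidences printed with four decimals. The function name and the tuple structure of instances are assumptions.

```python
import os


def write_instance_predictions(out_dir, scan_id, instances):
    """Write <scan_id>.txt plus one binary vertex-mask file per instance.

    instances: list of (mask, label_id, confidence), where mask is a
    sequence of 0/1 values in mesh-vertex order.
    """
    mask_dir = os.path.join(out_dir, "predicted_masks")
    os.makedirs(mask_dir, exist_ok=True)
    lines = []
    for i, (mask, label_id, confidence) in enumerate(instances):
        mask_name = f"{scan_id}_{i:03d}.txt"  # contains no spaces
        with open(os.path.join(mask_dir, mask_name), "w") as f:
            f.writelines(f"{1 if v else 0}\n" for v in mask)
        # Relative path, label id and confidence, separated by single spaces.
        lines.append(f"predicted_masks/{mask_name} {int(label_id)} {confidence:.4f}\n")
    with open(os.path.join(out_dir, f"{scan_id}.txt"), "w") as f:
        f.writelines(lines)
```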

Format for 2D Semantic Label Prediction

Results must be provided as 8-bit PNGs encoding the predicted class label ids.

A submission must contain a result image for each test image, named according to the corresponding test image, as

unzip_root/
|-- scene0707_00_000000.png
|-- scene0707_00_000200.png
|-- scene0707_00_000400.png
⋮
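Any image library can write the 8-bit label PNGs. To stay dependency-free, this sketch builds a grayscale PNG directly from its chunks using struct and zlib. The function names are hypothetical. The caller is responsible for producing an image of the expected size and saving it under the corresponding file name.

```python
import struct
import zlib


def _png_chunk(chunk_type, data):
    """Build one PNG chunk: length, type, data, CRC-32 over type + data."""
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def encode_label_png(labels):
    """Encode a 2D list of class label ids (0..255) as an 8-bit grayscale PNG."""
    height, width = len(labels), len(labels[0])
    raw = bytearray()
    for row in labels:
        if len(row) != width:
            raise ValueError("all rows must have the same width")
        raw.append(0)  # filter type 0 (None) at the start of each scanline
        raw.extend(bytes(row))  # bytes() raises ValueError for values > 255
    # IHDR: width, height, bit depth 8, color type 0 (grayscale), then the
    # compression, filter and interlace methods, all 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _png_chunk(b"IHDR", ihdr)
        + _png_chunk(b"IDAT", zlib.compress(bytes(raw)))
        + _png_chunk(b"IEND", b"")
    )
```

The bytes can then be saved with a regular binary write, e.g. `with open("scene0707_00_000000.png", "wb") as f: f.write(png_bytes)`.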

Format for 2D Semantic Instance Prediction

Results must be provided as a text file for each test image. Each text file should contain a line for each instance, containing the relative path to a binary mask image of the instance, the predicted label id, and the confidence of the prediction. The result text files must be named according to the corresponding test image, as

unzip_root/
|-- scene0707_00_000000.txt
|-- scene0707_00_000200.txt
|-- scene0707_00_000400.txt
⋮
|-- predicted_masks/
    |-- scene0707_00_000000_000.png
    |-- scene0707_00_000000_001.png
    ⋮

For example, scene0707_00_000000.txt could contain:

predicted_masks/scene0707_00_000000_000.png 33 0.7234
predicted_masks/scene0707_00_000000_001.png 5 0.9038
⋮
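Each instance line consists of whitespace-separated fields. A quick local check can therefore catch malformed lines, such as mask paths containing spaces, before uploading. The hypothetical validator below applies equally to the 3D instance prediction files.

```python
def parse_instance_line(line):
    """Parse '<relative mask path> <label id> <confidence>' into a tuple.

    Raises ValueError unless the line has exactly three whitespace-separated
    fields (a mask path containing spaces would produce more), the path is
    relative, and the label/confidence parse as int/float.
    """
    fields = line.split()
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {line!r}")
    path, label, confidence = fields
    if path.startswith("/"):
        raise ValueError(f"mask path must be relative: {path!r}")
    return path, int(label), float(confidence)


def check_prediction_file(lines):
    """Parse every non-empty line of a prediction file."""
    return [parse_instance_line(line) for line in lines if line.strip()]
```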

Format for Scene Type Classification

Results must be provided as a single text file containing one line per scan: the scan id and its predicted scene type id, separated by a space. For example, a submission file could look like:

scene0707_00 1
scene0708_00 2
scene0709_00 3
⋮
scene0806_00 14
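The sketch below produces the contents of the scene type result file from a dict of predictions. The function name and the optional allowed_ids check are assumptions, and the name of the result file is not specified here.

```python
def format_scene_type_submission(predictions, allowed_ids=None):
    """Return the text of the scene type result file.

    predictions: {scan id -> predicted scene type id}.
    allowed_ids: optional set of valid scene type ids; any other id raises
    ValueError (a local sanity check, not a server rule).
    Lines are 'scan_id type_id', sorted by scan id.
    """
    lines = []
    for scan_id, type_id in sorted(predictions.items()):
        type_id = int(type_id)
        if allowed_ids is not None and type_id not in allowed_ids:
            raise ValueError(f"{scan_id}: scene type {type_id} not in allowed_ids")
        lines.append(f"{scan_id} {type_id}\n")
    return "".join(lines)
```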