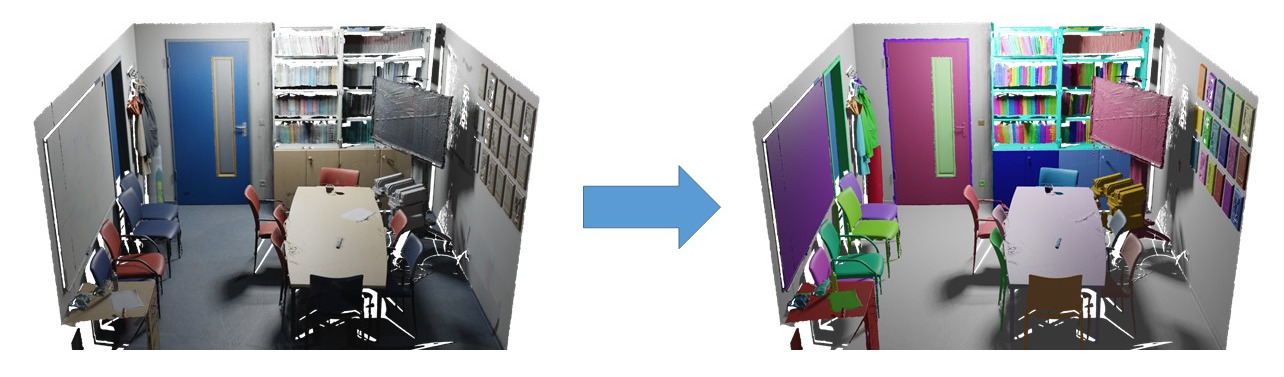

3D Instance Segmentation

This task involves detecting and segmenting the objects in a 3D scan mesh, which is obtained from the laser scanner point cloud. Submissions must provide a list of 3D instances with their semantic labels and predicted confidences, as well as a mask indicating the vertices belonging to each instance.

Evaluation and Metrics

The instance classes are the subset of the 100 semantic classes that covers only countable object classes.

Predicted labels are evaluated per-vertex over the vertices of the 5% decimated 3D scan mesh (mesh_aligned_0.05.ply). For 3D approaches that operate on other representations, such as grids or points, the predicted labels should be mapped onto the mesh vertices.
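One way to do this mapping is a nearest-neighbor lookup from each mesh vertex to the predicted points. The sketch below is only an illustration under that assumption, not the benchmark's own tooling: the function name `map_labels_to_vertices` and the toy coordinates are hypothetical, and the brute-force search is for readability only.

```python
import math


def map_labels_to_vertices(points, point_labels, vertices):
    """Give each mesh vertex the label of its nearest predicted point (brute force)."""
    vertex_labels = []
    for v in vertices:
        # Index of the predicted point closest to this vertex (Euclidean distance).
        nearest = min(range(len(points)), key=lambda i: math.dist(points[i], v))
        vertex_labels.append(point_labels[nearest])
    return vertex_labels


# Toy data: two predicted points with instance labels 3 and 7, three mesh vertices.
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
point_labels = [3, 7]
vertices = [(0.1, 0.0, 0.0), (0.9, 0.1, 0.0), (0.4, 0.0, 0.0)]
vertex_labels = map_labels_to_vertices(points, point_labels, vertices)
```

For real scans, a spatial index such as a KD-tree would likely replace the quadratic loop; the per-vertex output stays the same.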

Our evaluation ranks all methods according to the average precision for each class. We report the mean average precision at an overlap of 0.25 (AP 25%), at an overlap of 0.5 (AP 50%), and averaged over overlap thresholds from 0.5 to 0.95 in steps of 0.05, written [0.5:0.95:0.05] (AP). Note that multiple predictions of the same ground truth instance are penalized as false positives.
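The following is a minimal sketch of how such a metric can be computed for a single class, assuming masks are given as collections of vertex indices. It is a simplified illustration, not the benchmark's evaluation script: the function names, the `>=` threshold comparison, and the non-interpolated AP formula are all assumptions made here.

```python
def mask_iou(a, b):
    """Intersection over union of two masks given as vertex-index collections."""
    a, b = set(a), set(b)
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(preds, gts, iou_thresh):
    """preds: list of (confidence, vertex_ids); gts: list of vertex_ids (one class)."""
    if not gts:
        return 0.0
    matched = set()  # ground-truth instances already claimed by a prediction
    hits = []        # True = true positive, False = false positive
    for _, mask in sorted(preds, key=lambda p: -p[0]):
        best_iou, best_gt = 0.0, None
        for gi, gt in enumerate(gts):
            iou = mask_iou(mask, gt)
            if iou > best_iou:
                best_iou, best_gt = iou, gi
        if best_gt is not None and best_iou >= iou_thresh and best_gt not in matched:
            matched.add(best_gt)
            hits.append(True)
        else:
            # Low overlap, or a duplicate of an already matched instance.
            hits.append(False)
    ap, tp = 0.0, 0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            tp += 1
            ap += (tp / rank) / len(gts)  # precision at each newly recalled instance
    return ap


thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]  # 0.50, 0.55, ..., 0.95
gts = [[0, 1, 2, 3], [10, 11, 12, 13]]
preds = [
    (0.9, [0, 1, 2, 3]),               # exact match of the first instance
    (0.8, [0, 1, 2]),                  # duplicate of the first instance
    (0.7, [10, 11, 12, 13, 14, 15]),   # loose match of the second instance
]
ap_25 = average_precision(preds, gts, 0.25)
ap_50 = average_precision(preds, gts, 0.50)
ap_mean = sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)
```

In this toy case the second prediction lowers the score even though it overlaps the first instance well, because that instance is already matched by a more confident prediction.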

Evaluation excludes anonymized vertices. These vertices are listed in mesh_aligned_0.05_mask.txt.
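A small sketch of how excluded vertices could be dropped from instance masks before any overlap is computed. The file layout is an assumption: the parser below expects one integer vertex index per line, which should be checked against the actual file. Both function names are hypothetical.

```python
def parse_excluded_vertices(lines):
    """Parse vertex indices, ASSUMING one integer index per non-empty line."""
    return {int(line) for line in lines if line.strip()}


def drop_excluded(vertex_ids, excluded):
    """Remove excluded (anonymized) vertices from an instance mask."""
    return [v for v in vertex_ids if v not in excluded]


# Stand-in for the lines of the mask file (hypothetical content).
excluded = parse_excluded_vertices(["2\n", "5\n", "\n"])
pred_mask = drop_excluded([0, 1, 2, 3, 5], excluded)
gt_mask = drop_excluded([0, 1, 2], excluded)
```

Applying the same filter to both predicted and ground truth masks keeps the overlap computation restricted to the vertices that are actually evaluated.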

Results

The benchmark is currently evaluated on the v2 version of the dataset.| Methods | MEAN | BACKPACK | BAG | BASKET | BED | BINDER | BLANKET | BLINDS | BOOK | BOOKSHELF | BOTTLE | BOWL | BOX | BUCKET | CABINET | CEILING LAMP | CHAIR | CLOCK | COAT HANGER | COMPUTER TOWER | CONTAINER | CRATE | CUP | CURTAIN | CUSHION | CUTTING BOARD | DOOR | EXHAUST FAN | FILE FOLDER | HEADPHONES | HEATER | JACKET | JAR | KETTLE | KEYBOARD | KITCHEN CABINET | LAPTOP | LIGHT SWITCH | MARKER | MICROWAVE | MONITOR | MOUSE | OFFICE CHAIR | PAINTING | PAN | PAPER BAG | PAPER TOWEL | PICTURE | PILLOW | PLANT | PLANT POT | POSTER | POT | POWER STRIP | PRINTER | RACK | REFRIGERATOR | SHELF | SHOE RACK | SHOES | SINK | SLIPPERS | SMOKE DETECTOR | SOAP DISPENSER | SOCKET | SOFA | SPEAKER | SPRAY BOTTLE | STAPLER | STORAGE CABINET | SUITCASE | TABLE | TABLE LAMP | TAP | TELEPHONE | TISSUE BOX | TOILET | TOILET BRUSH | TOILET PAPER | TOWEL | TRASH CAN | TV | WHITEBOARD | WHITEBOARD ERASER | WINDOW |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| LaSSM | 0.480 | 0.686 | 0.389 | 0.000 | 0.694 | 0.000 | 0.417 | 0.762 | 0.122 | 0.567 | 0.266 | 0.000 | 0.290 | 0.269 | 0.516 | 0.887 | 0.782 | 0.833 | 0.600 | 0.633 | 0.000 | 0.117 | 0.521 | 0.808 | 0.120 | 0.000 | 0.832 | 0.676 | 0.000 | 0.375 | 0.857 | 0.447 | 0.000 | 0.589 | 0.844 | 0.388 | 0.688 | 0.616 | 0.000 | 0.858 | 0.878 | 0.861 | 0.971 | 0.005 | 0.000 | 0.000 | 0.204 | 0.275 | 0.511 | 0.777 | 0.385 | 0.013 | 0.000 | 0.460 | 0.350 | 0.016 | 0.833 | 0.202 | 0.166 | 0.397 | 1.000 | 0.230 | 0.879 | 0.666 | 0.515 | 0.953 | 0.254 | 0.023 | 0.239 | 0.543 | 0.595 | 0.700 | 1.000 | 0.940 | 0.785 | 0.095 | 0.909 | 0.776 | 0.129 | 0.380 | 0.865 | 0.988 | 0.901 | 0.616 | 0.580 |

| Lei Yao, Yi Wang, Yawen Cui, Moyun Liu, Lap-Pui Chau. Efficient Semantic-Spatial Query Decoding via Local Aggregation and State Space Models for 3D Instance Segmentation. Under Review | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| SGIFormer | 0.457 | 0.533 | 0.228 | 0.023 | 0.637 | 0.002 | 0.507 | 0.723 | 0.067 | 0.569 | 0.330 | 0.012 | 0.246 | 0.259 | 0.578 | 0.878 | 0.817 | 0.833 | 0.600 | 0.691 | 0.000 | 0.078 | 0.605 | 0.848 | 0.089 | 0.000 | 0.766 | 0.570 | 0.000 | 0.390 | 0.840 | 0.459 | 0.005 | 0.666 | 0.881 | 0.164 | 0.931 | 0.523 | 0.000 | 0.816 | 0.946 | 0.883 | 0.877 | 0.030 | 0.095 | 0.007 | 0.018 | 0.203 | 0.392 | 0.777 | 0.523 | 0.014 | 0.000 | 0.293 | 0.494 | 0.200 | 0.381 | 0.148 | 0.000 | 0.438 | 1.000 | 0.299 | 0.862 | 0.555 | 0.165 | 0.777 | 0.147 | 0.152 | 0.000 | 0.538 | 0.491 | 0.714 | 0.963 | 0.831 | 0.907 | 0.364 | 0.909 | 0.613 | 0.199 | 0.291 | 0.736 | 0.988 | 0.909 | 0.627 | 0.497 |

| L Yao, Y Wang, M Liu, LP Chau. SGIFormer: Semantic-guided and geometric-enhanced interleaving transformer for 3D instance segmentation. IEEE 2024 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| OneFormer3D | 0.433 | 0.717 | 0.138 | 0.000 | 0.326 | 0.002 | 0.529 | 0.741 | 0.100 | 0.505 | 0.375 | 0.301 | 0.252 | 0.378 | 0.498 | 0.873 | 0.885 | 0.555 | 0.400 | 0.654 | 0.000 | 0.009 | 0.580 | 0.697 | 0.040 | 0.000 | 0.789 | 0.480 | 0.000 | 0.116 | 0.763 | 0.319 | 0.002 | 0.629 | 0.868 | 0.253 | 0.954 | 0.502 | 0.000 | 0.964 | 0.917 | 0.872 | 0.956 | 0.090 | 0.023 | 0.057 | 0.027 | 0.119 | 0.342 | 0.777 | 0.368 | 0.071 | 0.000 | 0.170 | 0.253 | 0.025 | 0.645 | 0.121 | 0.000 | 0.608 | 0.882 | 0.187 | 0.879 | 0.666 | 0.164 | 0.583 | 0.018 | 0.011 | 0.016 | 0.671 | 0.137 | 0.642 | 1.000 | 0.801 | 0.800 | 0.322 | 0.909 | 0.800 | 0.054 | 0.273 | 0.734 | 1.000 | 0.945 | 0.727 | 0.545 |

| Maxim Kolodiazhnyi, Anna Vorontsova, Anton Konushin, Danila Rukhovich. OneFormer3D: One Transformer for Unified Point Cloud Segmentation. CVPR 2024 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| SPFormer | 0.435 | 0.446 | 0.084 | 0.003 | 0.442 | 0.000 | 0.345 | 0.734 | 0.047 | 0.521 | 0.343 | 0.250 | 0.236 | 0.143 | 0.489 | 0.848 | 0.862 | 0.666 | 0.265 | 0.597 | 0.000 | 0.020 | 0.633 | 0.761 | 0.190 | 0.000 | 0.685 | 0.533 | 0.000 | 0.400 | 0.851 | 0.406 | 0.007 | 0.246 | 0.872 | 0.274 | 0.501 | 0.507 | 0.012 | 0.985 | 0.920 | 0.892 | 0.931 | 0.090 | 0.142 | 0.023 | 0.000 | 0.223 | 0.403 | 0.777 | 0.214 | 0.072 | 0.000 | 0.219 | 0.344 | 0.200 | 0.833 | 0.176 | 0.000 | 0.508 | 0.899 | 0.125 | 0.829 | 0.649 | 0.136 | 0.944 | 0.018 | 0.005 | 0.000 | 0.562 | 0.378 | 0.612 | 1.000 | 0.900 | 0.834 | 0.371 | 0.909 | 0.963 | 0.132 | 0.328 | 0.712 | 1.000 | 0.853 | 0.751 | 0.464 |

| Jiahao Sun, Chunmei Qing, Junpeng Tan, Xiangmin Xu. Superpoint Transformer for 3D Scene Instance Segmentation. AAAI2023 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| LAFNet-(w/o normal) | 0.452 | 0.572 | 0.108 | 0.000 | 0.513 | 0.000 | 0.505 | 0.741 | 0.073 | 0.477 | 0.313 | 0.162 | 0.238 | 0.352 | 0.517 | 0.781 | 0.905 | 0.717 | 0.600 | 0.591 | 0.000 | 0.032 | 0.492 | 0.572 | 0.039 | 0.020 | 0.854 | 0.500 | 0.000 | 0.108 | 0.909 | 0.539 | 0.000 | 0.589 | 0.911 | 0.169 | 0.925 | 0.493 | 0.000 | 0.625 | 0.942 | 0.799 | 0.926 | 0.000 | 0.000 | 0.008 | 0.091 | 0.312 | 0.375 | 0.865 | 0.409 | 0.016 | 0.000 | 0.365 | 0.327 | 0.020 | 0.833 | 0.338 | 0.166 | 0.410 | 1.000 | 0.344 | 0.844 | 0.666 | 0.134 | 1.000 | 0.029 | 0.002 | 0.215 | 0.568 | 0.218 | 0.684 | 1.000 | 0.881 | 0.815 | 0.454 | 0.909 | 0.864 | 0.100 | 0.273 | 0.760 | 1.000 | 0.904 | 0.572 | 0.618 |

| SPFormer - Pretrained scannet | 0.432 | 0.557 | 0.049 | 0.000 | 0.386 | 0.000 | 0.472 | 0.773 | 0.068 | 0.512 | 0.334 | 0.173 | 0.217 | 0.059 | 0.479 | 0.835 | 0.853 | 0.802 | 0.458 | 0.629 | 0.000 | 0.009 | 0.654 | 0.444 | 0.115 | 0.000 | 0.714 | 0.437 | 0.000 | 0.090 | 0.937 | 0.550 | 0.000 | 0.580 | 0.826 | 0.193 | 0.530 | 0.508 | 0.000 | 0.985 | 0.888 | 0.835 | 0.813 | 0.011 | 0.142 | 0.142 | 0.027 | 0.134 | 0.387 | 0.723 | 0.552 | 0.065 | 0.000 | 0.307 | 0.571 | 0.000 | 0.833 | 0.229 | 0.000 | 0.309 | 0.976 | 0.092 | 0.833 | 0.666 | 0.128 | 0.866 | 0.125 | 0.023 | 0.014 | 0.414 | 0.364 | 0.586 | 0.787 | 0.983 | 0.734 | 0.605 | 0.880 | 0.893 | 0.295 | 0.116 | 0.764 | 1.000 | 0.906 | 0.622 | 0.455 |

| Jiahao Sun, Chunmei Qing, Junpeng Tan, Xiangmin Xu. Superpoint Transformer for 3D Scene Instance Segmentation. AAAI2023 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| LAFNet-(w/ normal) | 0.451 | 0.547 | 0.155 | 0.004 | 0.386 | 0.001 | 0.420 | 0.730 | 0.083 | 0.437 | 0.296 | 0.136 | 0.246 | 0.105 | 0.594 | 0.804 | 0.906 | 0.833 | 0.426 | 0.695 | 0.000 | 0.113 | 0.489 | 0.510 | 0.035 | 0.103 | 0.842 | 0.659 | 0.000 | 0.401 | 0.936 | 0.539 | 0.006 | 0.666 | 0.920 | 0.110 | 0.846 | 0.656 | 0.000 | 0.625 | 0.941 | 0.904 | 0.948 | 0.000 | 0.035 | 0.000 | 0.092 | 0.206 | 0.425 | 0.888 | 0.365 | 0.029 | 0.000 | 0.224 | 0.494 | 0.000 | 0.629 | 0.323 | 0.041 | 0.450 | 1.000 | 0.218 | 0.885 | 0.636 | 0.113 | 0.888 | 0.022 | 0.022 | 0.200 | 0.529 | 0.311 | 0.693 | 0.963 | 0.766 | 0.855 | 0.222 | 0.909 | 0.885 | 0.300 | 0.403 | 0.778 | 0.888 | 0.951 | 0.647 | 0.586 |

| PTv3 - PointGroup | 0.387 | 0.598 | 0.164 | 0.000 | 0.714 | 0.000 | 0.243 | 0.660 | 0.088 | 0.487 | 0.075 | 0.187 | 0.283 | 0.267 | 0.435 | 0.857 | 0.867 | 0.833 | 0.000 | 0.562 | 0.000 | 0.047 | 0.175 | 0.375 | 0.000 | 0.200 | 0.815 | 0.437 | 0.000 | 0.525 | 0.895 | 0.289 | 0.014 | 0.523 | 0.887 | 0.360 | 0.795 | 0.000 | 0.000 | 0.777 | 0.886 | 0.000 | 0.878 | 0.000 | 0.107 | 0.000 | 0.166 | 0.382 | 0.303 | 0.839 | 0.450 | 0.104 | 0.000 | 0.389 | 0.214 | 0.000 | 0.666 | 0.301 | 0.100 | 0.257 | 0.902 | 0.215 | 0.166 | 0.666 | 0.017 | 0.800 | 0.444 | 0.000 | 0.000 | 0.714 | 0.428 | 0.571 | 0.720 | 0.416 | 0.873 | 0.342 | 0.833 | 0.800 | 0.185 | 0.221 | 0.661 | 0.767 | 0.782 | 0.000 | 0.506 |

| Xiaoyang Wu, Li Jiang, Peng-Shuai Wang, Zhijian Liu, Xihui Liu, Yu Qiao, Wanli Ouyang, Tong He, Hengshuang Zhao. Point Transformer V3: Simpler, Faster, Stronger. CVPR 2024 Oral | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| SoftGroup | 0.234 | 0.362 | 0.016 | 0.000 | 0.173 | 0.000 | 0.192 | 0.133 | 0.012 | 0.056 | 0.087 | 0.000 | 0.121 | 0.140 | 0.252 | 0.712 | 0.596 | 0.666 | 0.000 | 0.539 | 0.000 | 0.037 | 0.040 | 0.676 | 0.019 | 0.000 | 0.345 | 0.062 | 0.000 | 0.000 | 0.513 | 0.393 | 0.000 | 0.500 | 0.658 | 0.000 | 0.304 | 0.000 | 0.000 | 0.875 | 0.487 | 0.000 | 0.689 | 0.000 | 0.000 | 0.023 | 0.074 | 0.233 | 0.066 | 0.053 | 0.454 | 0.059 | 0.000 | 0.000 | 0.386 | 0.000 | 0.451 | 0.006 | 0.000 | 0.159 | 0.673 | 0.307 | 0.111 | 0.333 | 0.010 | 0.663 | 0.111 | 0.142 | 0.000 | 0.286 | 0.153 | 0.179 | 0.400 | 0.000 | 0.682 | 0.285 | 0.425 | 0.800 | 0.111 | 0.240 | 0.467 | 0.933 | 0.687 | 0.000 | 0.014 |

| Thang Vu, Kookhoi Kim, Tung M. Luu, Thanh Nguyen, Chang D. Yoo. SoftGroup for 3D Instance Segmentation on Point Clouds. CVPR 2022 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| PointGroup | 0.152 | 0.387 | 0.000 | 0.000 | 0.017 | 0.000 | 0.286 | 0.000 | 0.000 | 0.150 | 0.009 | 0.000 | 0.073 | 0.000 | 0.243 | 0.743 | 0.843 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.319 | 0.000 | 0.000 | 0.634 | 0.000 | 0.000 | 0.000 | 0.803 | 0.248 | 0.000 | 0.041 | 0.230 | 0.000 | 0.000 | 0.000 | 0.000 | 0.006 | 0.730 | 0.000 | 0.812 | 0.000 | 0.000 | 0.000 | 0.000 | 0.308 | 0.386 | 0.649 | 0.254 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.005 | 0.000 | 0.182 | 0.733 | 0.000 | 0.000 | 0.000 | 0.000 | 1.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.321 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.108 | 0.597 | 0.613 | 0.952 | 0.000 | 0.121 |

| Li Jiang, Hengshuang Zhao, Shaoshuai Shi, Shu Liu, Chi-Wing Fu, Jiaya Jia. PointGroup: Dual-Set Point Grouping for 3D Instance Segmentation. CVPR 2020 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Please refer to the submission instructions before making a submission.
