3D Semantic Label Benchmark

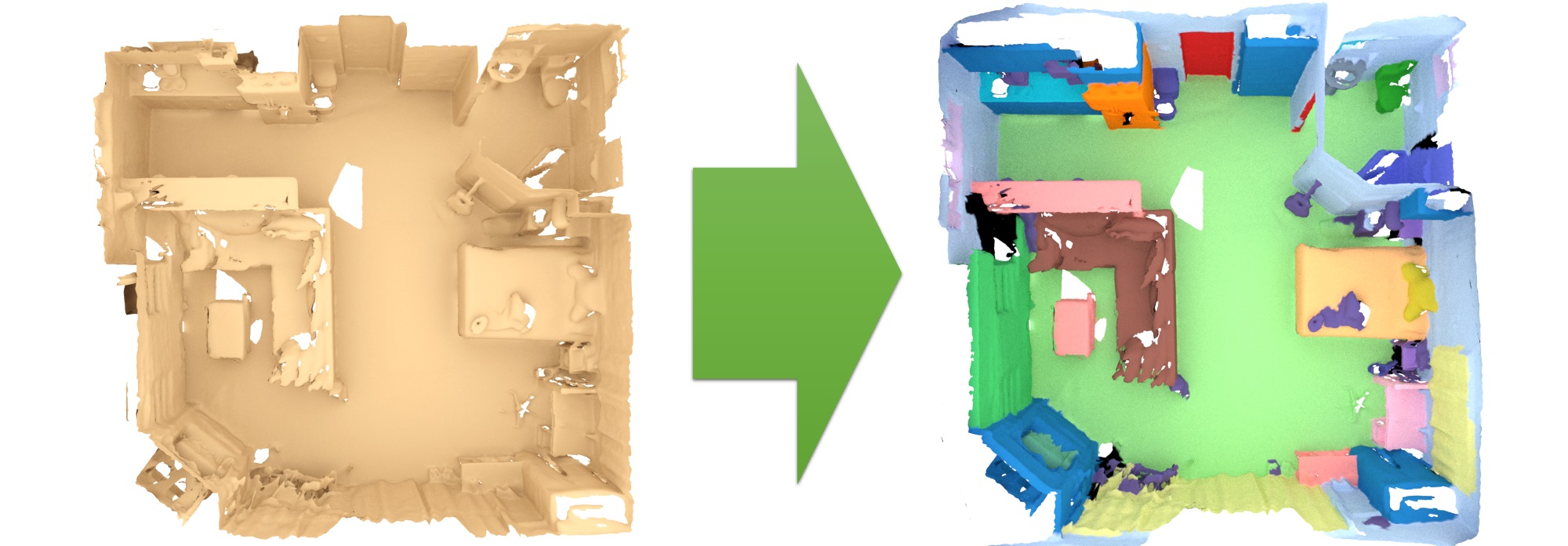

The 3D semantic labeling task involves predicting a semantic labeling of a 3D scan mesh.

Evaluation and metricsOur evaluation ranks all methods according to the PASCAL VOC intersection-over-union metric (IoU). IoU = TP/(TP+FP+FN), where TP, FP, and FN are the numbers of true positive, false positive, and false negative pixels, respectively. Predicted labels are evaluated per-vertex over the respective 3D scan mesh; for 3D approaches that operate on other representations like grids or points, the predicted labels should be mapped onto the mesh vertices (e.g., one such example for grid to mesh vertices is provided in the evaluation helpers).

This table lists the benchmark results for the 3D semantic label scenario.

| Method | Info | avg iou | bathtub | bed | bookshelf | cabinet | chair | counter | curtain | desk | door | floor | otherfurniture | picture | refrigerator | shower curtain | sink | sofa | table | toilet | wall | window |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PTv3-PPT-ALC | 0.798 1 | 0.911 11 | 0.812 23 | 0.854 8 | 0.770 12 | 0.856 15 | 0.555 17 | 0.943 1 | 0.660 26 | 0.735 2 | 0.979 1 | 0.606 7 | 0.492 1 | 0.792 4 | 0.934 4 | 0.841 2 | 0.819 6 | 0.716 9 | 0.947 10 | 0.906 1 | 0.822 1 | |

| Guangda Ji, Silvan Weder, Francis Engelmann, Marc Pollefeys, Hermann Blum: ARKit LabelMaker: A New Scale for Indoor 3D Scene Understanding. CVPR 2025 | ||||||||||||||||||||||

| PTv3 ScanNet | 0.794 3 | 0.941 3 | 0.813 22 | 0.851 11 | 0.782 7 | 0.890 2 | 0.597 1 | 0.916 6 | 0.696 11 | 0.713 5 | 0.979 1 | 0.635 1 | 0.384 3 | 0.793 3 | 0.907 10 | 0.821 5 | 0.790 37 | 0.696 14 | 0.967 4 | 0.903 3 | 0.805 2 | |

| Xiaoyang Wu, Li Jiang, Peng-Shuai Wang, Zhijian Liu, Xihui Liu, Yu Qiao, Wanli Ouyang, Tong He, Hengshuang Zhao: Point Transformer V3: Simpler, Faster, Stronger. CVPR 2024 (Oral) | ||||||||||||||||||||||

| DITR ScanNet | 0.797 2 | 0.727 77 | 0.869 1 | 0.882 1 | 0.785 6 | 0.868 7 | 0.578 5 | 0.943 1 | 0.744 1 | 0.727 3 | 0.979 1 | 0.627 2 | 0.364 9 | 0.824 1 | 0.949 2 | 0.779 15 | 0.844 1 | 0.757 1 | 0.982 1 | 0.905 2 | 0.802 3 | |

| Karim Abou Zeid, Kadir Yilmaz, Daan de Geus, Alexander Hermans, David Adrian, Timm Linder, Bastian Leibe: DINO in the Room: Leveraging 2D Foundation Models for 3D Segmentation. | ||||||||||||||||||||||

| Mix3D | 0.781 5 | 0.964 2 | 0.855 2 | 0.843 20 | 0.781 8 | 0.858 13 | 0.575 8 | 0.831 40 | 0.685 17 | 0.714 4 | 0.979 1 | 0.594 10 | 0.310 31 | 0.801 2 | 0.892 19 | 0.841 2 | 0.819 6 | 0.723 6 | 0.940 15 | 0.887 8 | 0.725 29 | |

| Alexey Nekrasov, Jonas Schult, Or Litany, Bastian Leibe, Francis Engelmann: Mix3D: Out-of-Context Data Augmentation for 3D Scenes. 3DV 2021 (Oral) | ||||||||||||||||||||||

| PonderV2 | 0.785 4 | 0.978 1 | 0.800 31 | 0.833 30 | 0.788 4 | 0.853 20 | 0.545 21 | 0.910 9 | 0.713 3 | 0.705 6 | 0.979 1 | 0.596 9 | 0.390 2 | 0.769 15 | 0.832 45 | 0.821 5 | 0.792 36 | 0.730 2 | 0.975 2 | 0.897 6 | 0.785 7 | |

| Haoyi Zhu, Honghui Yang, Xiaoyang Wu, Di Huang, Sha Zhang, Xianglong He, Tong He, Hengshuang Zhao, Chunhua Shen, Yu Qiao, Wanli Ouyang: PonderV2: Pave the Way for 3D Foundataion Model with A Universal Pre-training Paradigm. | ||||||||||||||||||||||

| IPCA | 0.731 37 | 0.890 18 | 0.837 4 | 0.864 4 | 0.726 37 | 0.873 5 | 0.530 31 | 0.824 44 | 0.489 94 | 0.647 25 | 0.978 6 | 0.609 5 | 0.336 20 | 0.624 57 | 0.733 64 | 0.758 23 | 0.776 44 | 0.570 72 | 0.949 9 | 0.877 17 | 0.728 25 | |

| OccuSeg+Semantic | 0.764 11 | 0.758 62 | 0.796 35 | 0.839 24 | 0.746 30 | 0.907 1 | 0.562 14 | 0.850 31 | 0.680 19 | 0.672 18 | 0.978 6 | 0.610 4 | 0.335 22 | 0.777 9 | 0.819 49 | 0.847 1 | 0.830 3 | 0.691 17 | 0.972 3 | 0.885 10 | 0.727 27 | |

| TTT-KD | 0.773 7 | 0.646 98 | 0.818 17 | 0.809 42 | 0.774 10 | 0.878 3 | 0.581 3 | 0.943 1 | 0.687 15 | 0.704 7 | 0.978 6 | 0.607 6 | 0.336 20 | 0.775 11 | 0.912 8 | 0.838 4 | 0.823 4 | 0.694 15 | 0.967 4 | 0.899 4 | 0.794 6 | |

| Lisa Weijler, Muhammad Jehanzeb Mirza, Leon Sick, Can Ekkazan, Pedro Hermosilla: TTT-KD: Test-Time Training for 3D Semantic Segmentation through Knowledge Distillation from Foundation Models. | ||||||||||||||||||||||

| PNE | 0.755 17 | 0.786 46 | 0.835 5 | 0.834 29 | 0.758 19 | 0.849 25 | 0.570 10 | 0.836 39 | 0.648 32 | 0.668 20 | 0.978 6 | 0.581 20 | 0.367 7 | 0.683 40 | 0.856 33 | 0.804 8 | 0.801 25 | 0.678 22 | 0.961 6 | 0.889 7 | 0.716 36 | |

| P. Hermosilla: Point Neighborhood Embeddings. | ||||||||||||||||||||||

| PointConvFormer | 0.749 22 | 0.793 44 | 0.790 40 | 0.807 44 | 0.750 28 | 0.856 15 | 0.524 32 | 0.881 18 | 0.588 59 | 0.642 31 | 0.977 10 | 0.591 12 | 0.274 53 | 0.781 7 | 0.929 5 | 0.804 8 | 0.796 30 | 0.642 40 | 0.947 10 | 0.885 10 | 0.715 37 | |

| Wenxuan Wu, Qi Shan, Li Fuxin: PointConvFormer: Revenge of the Point-based Convolution. | ||||||||||||||||||||||

| One-Thing-One-Click | 0.693 50 | 0.743 68 | 0.794 37 | 0.655 92 | 0.684 49 | 0.822 57 | 0.497 48 | 0.719 75 | 0.622 41 | 0.617 42 | 0.977 10 | 0.447 77 | 0.339 18 | 0.750 30 | 0.664 81 | 0.703 51 | 0.790 37 | 0.596 61 | 0.946 12 | 0.855 38 | 0.647 57 | |

| Zhengzhe Liu, Xiaojuan Qi, Chi-Wing Fu: One Thing One Click: A Self-Training Approach for Weakly Supervised 3D Semantic Segmentation. CVPR 2021 | ||||||||||||||||||||||

| One Thing One Click | 0.701 48 | 0.825 36 | 0.796 35 | 0.723 69 | 0.716 39 | 0.832 46 | 0.433 82 | 0.816 46 | 0.634 37 | 0.609 46 | 0.969 12 | 0.418 90 | 0.344 14 | 0.559 76 | 0.833 44 | 0.715 43 | 0.808 19 | 0.560 78 | 0.902 48 | 0.847 45 | 0.680 47 | |

| Swin3D | 0.779 6 | 0.861 24 | 0.818 17 | 0.836 27 | 0.790 3 | 0.875 4 | 0.576 7 | 0.905 10 | 0.704 7 | 0.739 1 | 0.969 12 | 0.611 3 | 0.349 12 | 0.756 25 | 0.958 1 | 0.702 52 | 0.805 20 | 0.708 10 | 0.916 39 | 0.898 5 | 0.801 4 | |

| ClickSeg_Semantic | 0.703 46 | 0.774 54 | 0.800 31 | 0.793 53 | 0.760 18 | 0.847 29 | 0.471 58 | 0.802 53 | 0.463 101 | 0.634 36 | 0.968 14 | 0.491 55 | 0.271 57 | 0.726 37 | 0.910 9 | 0.706 48 | 0.815 9 | 0.551 84 | 0.878 68 | 0.833 50 | 0.570 84 | |

| O3DSeg | 0.668 63 | 0.822 37 | 0.771 53 | 0.496 113 | 0.651 59 | 0.833 45 | 0.541 23 | 0.761 62 | 0.555 76 | 0.611 44 | 0.966 15 | 0.489 56 | 0.370 6 | 0.388 106 | 0.580 89 | 0.776 17 | 0.751 63 | 0.570 72 | 0.956 7 | 0.817 61 | 0.646 58 | |

| PPT-SpUNet-Joint | 0.766 9 | 0.932 5 | 0.794 37 | 0.829 32 | 0.751 26 | 0.854 18 | 0.540 25 | 0.903 11 | 0.630 39 | 0.672 18 | 0.963 16 | 0.565 26 | 0.357 10 | 0.788 5 | 0.900 14 | 0.737 31 | 0.802 21 | 0.685 20 | 0.950 8 | 0.887 8 | 0.780 8 | |

| Xiaoyang Wu, Zhuotao Tian, Xin Wen, Bohao Peng, Xihui Liu, Kaicheng Yu, Hengshuang Zhao: Towards Large-scale 3D Representation Learning with Multi-dataset Point Prompt Training. CVPR 2024 | ||||||||||||||||||||||

| OA-CNN-L_ScanNet20 | 0.756 16 | 0.783 48 | 0.826 6 | 0.858 6 | 0.776 9 | 0.837 40 | 0.548 20 | 0.896 15 | 0.649 31 | 0.675 16 | 0.962 17 | 0.586 17 | 0.335 22 | 0.771 14 | 0.802 54 | 0.770 19 | 0.787 39 | 0.691 17 | 0.936 20 | 0.880 13 | 0.761 14 | |

| ResLFE_HDS | 0.772 8 | 0.939 4 | 0.824 7 | 0.854 8 | 0.771 11 | 0.840 35 | 0.564 13 | 0.900 12 | 0.686 16 | 0.677 14 | 0.961 18 | 0.537 36 | 0.348 13 | 0.769 15 | 0.903 12 | 0.785 13 | 0.815 9 | 0.676 26 | 0.939 16 | 0.880 13 | 0.772 11 | |

| DMF-Net | 0.752 20 | 0.906 15 | 0.793 39 | 0.802 48 | 0.689 47 | 0.825 53 | 0.556 16 | 0.867 24 | 0.681 18 | 0.602 51 | 0.960 19 | 0.555 32 | 0.365 8 | 0.779 8 | 0.859 30 | 0.747 27 | 0.795 33 | 0.717 8 | 0.917 38 | 0.856 36 | 0.764 13 | |

| C.Yang, Y.Yan, W.Zhao, J.Ye, X.Yang, A.Hussain, B.Dong, K.Huang: Towards Deeper and Better Multi-view Feature Fusion for 3D Semantic Segmentation. ICONIP 2023 | ||||||||||||||||||||||

| PointTransformerV2 | 0.752 20 | 0.742 69 | 0.809 26 | 0.872 2 | 0.758 19 | 0.860 12 | 0.552 18 | 0.891 17 | 0.610 46 | 0.687 8 | 0.960 19 | 0.559 30 | 0.304 34 | 0.766 18 | 0.926 6 | 0.767 20 | 0.797 29 | 0.644 39 | 0.942 13 | 0.876 20 | 0.722 32 | |

| Xiaoyang Wu, Yixing Lao, Li Jiang, Xihui Liu, Hengshuang Zhao: Point Transformer V2: Grouped Vector Attention and Partition-based Pooling. NeurIPS 2022 | ||||||||||||||||||||||

| DiffSeg3D2 | 0.745 28 | 0.725 79 | 0.814 21 | 0.837 25 | 0.751 26 | 0.831 47 | 0.514 37 | 0.896 15 | 0.674 20 | 0.684 11 | 0.960 19 | 0.564 27 | 0.303 35 | 0.773 12 | 0.820 48 | 0.713 45 | 0.798 28 | 0.690 19 | 0.923 31 | 0.875 21 | 0.757 15 | |

| OctFormer | 0.766 9 | 0.925 7 | 0.808 27 | 0.849 13 | 0.786 5 | 0.846 30 | 0.566 12 | 0.876 19 | 0.690 13 | 0.674 17 | 0.960 19 | 0.576 22 | 0.226 74 | 0.753 27 | 0.904 11 | 0.777 16 | 0.815 9 | 0.722 7 | 0.923 31 | 0.877 17 | 0.776 10 | |

| Peng-Shuai Wang: OctFormer: Octree-based Transformers for 3D Point Clouds. SIGGRAPH 2023 | ||||||||||||||||||||||

| MS-SFA-net | 0.730 38 | 0.910 12 | 0.819 14 | 0.837 25 | 0.698 44 | 0.838 38 | 0.532 29 | 0.872 21 | 0.605 50 | 0.676 15 | 0.959 23 | 0.535 39 | 0.341 17 | 0.649 46 | 0.598 88 | 0.708 47 | 0.810 16 | 0.664 35 | 0.895 54 | 0.879 16 | 0.771 12 | |

| O-CNN | 0.762 13 | 0.924 8 | 0.823 8 | 0.844 19 | 0.770 12 | 0.852 22 | 0.577 6 | 0.847 34 | 0.711 4 | 0.640 32 | 0.958 24 | 0.592 11 | 0.217 80 | 0.762 20 | 0.888 20 | 0.758 23 | 0.813 13 | 0.726 4 | 0.932 25 | 0.868 27 | 0.744 19 | |

| Peng-Shuai Wang, Yang Liu, Yu-Xiao Guo, Chun-Yu Sun, Xin Tong: O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis. SIGGRAPH 2017 | ||||||||||||||||||||||

| Retro-FPN | 0.744 29 | 0.842 31 | 0.800 31 | 0.767 62 | 0.740 32 | 0.836 42 | 0.541 23 | 0.914 7 | 0.672 22 | 0.626 38 | 0.958 24 | 0.552 33 | 0.272 55 | 0.777 9 | 0.886 22 | 0.696 53 | 0.801 25 | 0.674 29 | 0.941 14 | 0.858 34 | 0.717 34 | |

| Peng Xiang*, Xin Wen*, Yu-Shen Liu, Hui Zhang, Yi Fang, Zhizhong Han: Retrospective Feature Pyramid Network for Point Cloud Semantic Segmentation. ICCV 2023 | ||||||||||||||||||||||

| ConDaFormer | 0.755 17 | 0.927 6 | 0.822 10 | 0.836 27 | 0.801 1 | 0.849 25 | 0.516 36 | 0.864 28 | 0.651 30 | 0.680 13 | 0.958 24 | 0.584 19 | 0.282 47 | 0.759 23 | 0.855 35 | 0.728 34 | 0.802 21 | 0.678 22 | 0.880 67 | 0.873 24 | 0.756 17 | |

| Lunhao Duan, Shanshan Zhao, Nan Xue, Mingming Gong, Guisong Xia, Dacheng Tao: ConDaFormer : Disassembled Transformer with Local Structure Enhancement for 3D Point Cloud Understanding. Neurips, 2023 | ||||||||||||||||||||||

| CU-Hybrid Net | 0.764 11 | 0.924 8 | 0.819 14 | 0.840 23 | 0.757 21 | 0.853 20 | 0.580 4 | 0.848 32 | 0.709 5 | 0.643 28 | 0.958 24 | 0.587 16 | 0.295 39 | 0.753 27 | 0.884 23 | 0.758 23 | 0.815 9 | 0.725 5 | 0.927 27 | 0.867 28 | 0.743 20 | |

| DiffSegNet | 0.758 14 | 0.725 79 | 0.789 42 | 0.843 20 | 0.762 17 | 0.856 15 | 0.562 14 | 0.920 4 | 0.657 29 | 0.658 22 | 0.958 24 | 0.589 14 | 0.337 19 | 0.782 6 | 0.879 24 | 0.787 11 | 0.779 42 | 0.678 22 | 0.926 29 | 0.880 13 | 0.799 5 | |

| online3d | 0.727 39 | 0.715 84 | 0.777 49 | 0.854 8 | 0.748 29 | 0.858 13 | 0.497 48 | 0.872 21 | 0.572 67 | 0.639 33 | 0.957 29 | 0.523 44 | 0.297 38 | 0.750 30 | 0.803 53 | 0.744 28 | 0.810 16 | 0.587 68 | 0.938 18 | 0.871 26 | 0.719 33 | |

| BPNet | 0.749 22 | 0.909 13 | 0.818 17 | 0.811 40 | 0.752 24 | 0.839 37 | 0.485 54 | 0.842 36 | 0.673 21 | 0.644 27 | 0.957 29 | 0.528 43 | 0.305 33 | 0.773 12 | 0.859 30 | 0.788 10 | 0.818 8 | 0.693 16 | 0.916 39 | 0.856 36 | 0.723 31 | |

| Wenbo Hu, Hengshuang Zhao, Li Jiang, Jiaya Jia, Tien-Tsin Wong: Bidirectional Projection Network for Cross Dimension Scene Understanding. CVPR 2021 (Oral) | ||||||||||||||||||||||

| LSK3DNet | 0.755 17 | 0.899 17 | 0.823 8 | 0.843 20 | 0.764 16 | 0.838 38 | 0.584 2 | 0.845 35 | 0.717 2 | 0.638 34 | 0.956 31 | 0.580 21 | 0.229 73 | 0.640 50 | 0.900 14 | 0.750 26 | 0.813 13 | 0.729 3 | 0.920 35 | 0.872 25 | 0.757 15 | |

| Tuo Feng, Wenguan Wang, Fan Ma, Yi Yang: LSK3DNet: Towards Effective and Efficient 3D Perception with Large Sparse Kernels. CVPR 2024 | ||||||||||||||||||||||

| VMNet | 0.746 26 | 0.870 22 | 0.838 3 | 0.858 6 | 0.729 36 | 0.850 24 | 0.501 43 | 0.874 20 | 0.587 60 | 0.658 22 | 0.956 31 | 0.564 27 | 0.299 36 | 0.765 19 | 0.900 14 | 0.716 42 | 0.812 15 | 0.631 45 | 0.939 16 | 0.858 34 | 0.709 38 | |

| Zeyu HU, Xuyang Bai, Jiaxiang Shang, Runze Zhang, Jiayu Dong, Xin Wang, Guangyuan Sun, Hongbo Fu, Chiew-Lan Tai: VMNet: Voxel-Mesh Network for Geodesic-Aware 3D Semantic Segmentation. ICCV 2021 (Oral) | ||||||||||||||||||||||

| MSP | 0.748 24 | 0.623 101 | 0.804 29 | 0.859 5 | 0.745 31 | 0.824 55 | 0.501 43 | 0.912 8 | 0.690 13 | 0.685 10 | 0.956 31 | 0.567 25 | 0.320 28 | 0.768 17 | 0.918 7 | 0.720 39 | 0.802 21 | 0.676 26 | 0.921 33 | 0.881 12 | 0.779 9 | |

| StratifiedFormer | 0.747 25 | 0.901 16 | 0.803 30 | 0.845 18 | 0.757 21 | 0.846 30 | 0.512 38 | 0.825 43 | 0.696 11 | 0.645 26 | 0.956 31 | 0.576 22 | 0.262 64 | 0.744 33 | 0.861 29 | 0.742 29 | 0.770 49 | 0.705 11 | 0.899 51 | 0.860 33 | 0.734 22 | |

| Xin Lai*, Jianhui Liu*, Li Jiang, Liwei Wang, Hengshuang Zhao, Shu Liu, Xiaojuan Qi, Jiaya Jia: Stratified Transformer for 3D Point Cloud Segmentation. CVPR 2022 | ||||||||||||||||||||||

| SAT | 0.742 32 | 0.860 25 | 0.765 56 | 0.819 35 | 0.769 14 | 0.848 27 | 0.533 27 | 0.829 41 | 0.663 24 | 0.631 37 | 0.955 35 | 0.586 17 | 0.274 53 | 0.753 27 | 0.896 17 | 0.729 33 | 0.760 57 | 0.666 33 | 0.921 33 | 0.855 38 | 0.733 23 | |

| PointTransformer++ | 0.725 40 | 0.727 77 | 0.811 25 | 0.819 35 | 0.765 15 | 0.841 34 | 0.502 42 | 0.814 49 | 0.621 42 | 0.623 40 | 0.955 35 | 0.556 31 | 0.284 46 | 0.620 59 | 0.866 27 | 0.781 14 | 0.757 61 | 0.648 37 | 0.932 25 | 0.862 31 | 0.709 38 | |

| EQ-Net | 0.743 31 | 0.620 102 | 0.799 34 | 0.849 13 | 0.730 35 | 0.822 57 | 0.493 51 | 0.897 14 | 0.664 23 | 0.681 12 | 0.955 35 | 0.562 29 | 0.378 4 | 0.760 21 | 0.903 12 | 0.738 30 | 0.801 25 | 0.673 30 | 0.907 43 | 0.877 17 | 0.745 18 | |

| Zetong Yang*, Li Jiang*, Yanan Sun, Bernt Schiele, Jiaya JIa: A Unified Query-based Paradigm for Point Cloud Understanding. CVPR 2022 | ||||||||||||||||||||||

| RPN | 0.736 35 | 0.776 52 | 0.790 40 | 0.851 11 | 0.754 23 | 0.854 18 | 0.491 53 | 0.866 26 | 0.596 57 | 0.686 9 | 0.955 35 | 0.536 37 | 0.342 16 | 0.624 57 | 0.869 26 | 0.787 11 | 0.802 21 | 0.628 46 | 0.927 27 | 0.875 21 | 0.704 40 | |

| SparseConvNet | 0.725 40 | 0.647 97 | 0.821 11 | 0.846 17 | 0.721 38 | 0.869 6 | 0.533 27 | 0.754 65 | 0.603 53 | 0.614 43 | 0.955 35 | 0.572 24 | 0.325 26 | 0.710 39 | 0.870 25 | 0.724 37 | 0.823 4 | 0.628 46 | 0.934 22 | 0.865 30 | 0.683 46 | |

| LargeKernel3D | 0.739 34 | 0.909 13 | 0.820 12 | 0.806 46 | 0.740 32 | 0.852 22 | 0.545 21 | 0.826 42 | 0.594 58 | 0.643 28 | 0.955 35 | 0.541 35 | 0.263 63 | 0.723 38 | 0.858 32 | 0.775 18 | 0.767 50 | 0.678 22 | 0.933 23 | 0.848 44 | 0.694 43 | |

| Yukang Chen*, Jianhui Liu*, Xiangyu Zhang, Xiaojuan Qi, Jiaya Jia: LargeKernel3D: Scaling up Kernels in 3D Sparse CNNs. CVPR 2023 | ||||||||||||||||||||||

| MatchingNet | 0.724 42 | 0.812 41 | 0.812 23 | 0.810 41 | 0.735 34 | 0.834 44 | 0.495 50 | 0.860 29 | 0.572 67 | 0.602 51 | 0.954 41 | 0.512 49 | 0.280 49 | 0.757 24 | 0.845 41 | 0.725 36 | 0.780 41 | 0.606 56 | 0.937 19 | 0.851 43 | 0.700 42 | |

| VI-PointConv | 0.676 60 | 0.770 58 | 0.754 63 | 0.783 57 | 0.621 68 | 0.814 67 | 0.552 18 | 0.758 63 | 0.571 70 | 0.557 63 | 0.954 41 | 0.529 42 | 0.268 61 | 0.530 85 | 0.682 75 | 0.675 62 | 0.719 75 | 0.603 58 | 0.888 60 | 0.833 50 | 0.665 51 | |

| Xingyi Li, Wenxuan Wu, Xiaoli Z. Fern, Li Fuxin: The Devils in the Point Clouds: Studying the Robustness of Point Cloud Convolutions. | ||||||||||||||||||||||

| LRPNet | 0.742 32 | 0.816 39 | 0.806 28 | 0.807 44 | 0.752 24 | 0.828 51 | 0.575 8 | 0.839 38 | 0.699 9 | 0.637 35 | 0.954 41 | 0.520 47 | 0.320 28 | 0.755 26 | 0.834 43 | 0.760 22 | 0.772 46 | 0.676 26 | 0.915 41 | 0.862 31 | 0.717 34 | |

| DTC | 0.757 15 | 0.843 30 | 0.820 12 | 0.847 16 | 0.791 2 | 0.862 11 | 0.511 39 | 0.870 23 | 0.707 6 | 0.652 24 | 0.954 41 | 0.604 8 | 0.279 50 | 0.760 21 | 0.942 3 | 0.734 32 | 0.766 51 | 0.701 13 | 0.884 62 | 0.874 23 | 0.736 21 | |

| PointMetaBase | 0.714 44 | 0.835 32 | 0.785 44 | 0.821 33 | 0.684 49 | 0.846 30 | 0.531 30 | 0.865 27 | 0.614 43 | 0.596 55 | 0.953 45 | 0.500 52 | 0.246 69 | 0.674 41 | 0.888 20 | 0.692 54 | 0.764 53 | 0.624 48 | 0.849 89 | 0.844 49 | 0.675 48 | |

| PointConv | 0.666 64 | 0.781 49 | 0.759 59 | 0.699 77 | 0.644 63 | 0.822 57 | 0.475 56 | 0.779 57 | 0.564 73 | 0.504 84 | 0.953 45 | 0.428 84 | 0.203 88 | 0.586 67 | 0.754 60 | 0.661 68 | 0.753 62 | 0.588 67 | 0.902 48 | 0.813 64 | 0.642 59 | |

| Wenxuan Wu, Zhongang Qi, Li Fuxin: PointConv: Deep Convolutional Networks on 3D Point Clouds. CVPR 2019 | ||||||||||||||||||||||

| PointMRNet | 0.640 74 | 0.717 83 | 0.701 86 | 0.692 80 | 0.576 83 | 0.801 76 | 0.467 62 | 0.716 76 | 0.563 74 | 0.459 94 | 0.953 45 | 0.429 83 | 0.169 101 | 0.581 68 | 0.854 36 | 0.605 88 | 0.710 77 | 0.550 85 | 0.894 56 | 0.793 78 | 0.575 82 | |

| Weakly-Openseg v3 | 0.625 88 | 0.924 8 | 0.787 43 | 0.620 101 | 0.555 91 | 0.811 71 | 0.393 94 | 0.666 89 | 0.382 112 | 0.520 78 | 0.953 45 | 0.250 116 | 0.208 83 | 0.604 62 | 0.670 77 | 0.644 79 | 0.742 67 | 0.538 93 | 0.919 36 | 0.803 69 | 0.513 102 | |

| INS-Conv-semantic | 0.717 43 | 0.751 65 | 0.759 59 | 0.812 39 | 0.704 42 | 0.868 7 | 0.537 26 | 0.842 36 | 0.609 48 | 0.608 47 | 0.953 45 | 0.534 40 | 0.293 40 | 0.616 60 | 0.864 28 | 0.719 41 | 0.793 34 | 0.640 41 | 0.933 23 | 0.845 48 | 0.663 52 | |

| PicassoNet-II | 0.692 51 | 0.732 73 | 0.772 51 | 0.786 54 | 0.677 51 | 0.866 9 | 0.517 35 | 0.848 32 | 0.509 87 | 0.626 38 | 0.952 50 | 0.536 37 | 0.225 76 | 0.545 82 | 0.704 71 | 0.689 59 | 0.810 16 | 0.564 77 | 0.903 47 | 0.854 40 | 0.729 24 | |

| Huan Lei, Naveed Akhtar, Mubarak Shah, and Ajmal Mian: Geometric feature learning for 3D meshes. | ||||||||||||||||||||||

| APCF-Net | 0.631 82 | 0.742 69 | 0.687 98 | 0.672 85 | 0.557 89 | 0.792 83 | 0.408 87 | 0.665 90 | 0.545 77 | 0.508 81 | 0.952 50 | 0.428 84 | 0.186 95 | 0.634 53 | 0.702 72 | 0.620 84 | 0.706 81 | 0.555 82 | 0.873 75 | 0.798 73 | 0.581 80 | |

| Haojia, Lin: Adaptive Pyramid Context Fusion for Point Cloud Perception. GRSL | ||||||||||||||||||||||

| SConv | 0.636 78 | 0.830 34 | 0.697 89 | 0.752 67 | 0.572 85 | 0.780 88 | 0.445 74 | 0.716 76 | 0.529 80 | 0.530 72 | 0.951 52 | 0.446 78 | 0.170 100 | 0.507 91 | 0.666 80 | 0.636 81 | 0.682 90 | 0.541 91 | 0.886 61 | 0.799 71 | 0.594 78 | |

| PointASNL | 0.666 64 | 0.703 87 | 0.781 47 | 0.751 68 | 0.655 56 | 0.830 48 | 0.471 58 | 0.769 60 | 0.474 97 | 0.537 69 | 0.951 52 | 0.475 62 | 0.279 50 | 0.635 52 | 0.698 74 | 0.675 62 | 0.751 63 | 0.553 83 | 0.816 96 | 0.806 66 | 0.703 41 | |

| Xu Yan, Chaoda Zheng, Zhen Li, Sheng Wang, Shuguang Cui: PointASNL: Robust Point Clouds Processing using Nonlocal Neural Networks with Adaptive Sampling. CVPR 2020 | ||||||||||||||||||||||

| joint point-based | 0.634 80 | 0.614 103 | 0.778 48 | 0.667 89 | 0.633 67 | 0.825 53 | 0.420 85 | 0.804 51 | 0.467 99 | 0.561 62 | 0.951 52 | 0.494 53 | 0.291 42 | 0.566 73 | 0.458 101 | 0.579 98 | 0.764 53 | 0.559 80 | 0.838 91 | 0.814 62 | 0.598 76 | |

| Hung-Yueh Chiang, Yen-Liang Lin, Yueh-Cheng Liu, Winston H. Hsu: A Unified Point-Based Framework for 3D Segmentation. 3DV 2019 | ||||||||||||||||||||||

| MinkowskiNet | 0.736 35 | 0.859 26 | 0.818 17 | 0.832 31 | 0.709 41 | 0.840 35 | 0.521 34 | 0.853 30 | 0.660 26 | 0.643 28 | 0.951 52 | 0.544 34 | 0.286 45 | 0.731 36 | 0.893 18 | 0.675 62 | 0.772 46 | 0.683 21 | 0.874 74 | 0.852 42 | 0.727 27 | |

| C. Choy, J. Gwak, S. Savarese: 4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks. CVPR 2019 | ||||||||||||||||||||||

| DGNet | 0.684 55 | 0.712 85 | 0.784 45 | 0.782 58 | 0.658 54 | 0.835 43 | 0.499 47 | 0.823 45 | 0.641 34 | 0.597 54 | 0.950 56 | 0.487 57 | 0.281 48 | 0.575 70 | 0.619 85 | 0.647 75 | 0.764 53 | 0.620 51 | 0.871 80 | 0.846 47 | 0.688 45 | |

| FusionNet | 0.688 53 | 0.704 86 | 0.741 75 | 0.754 66 | 0.656 55 | 0.829 49 | 0.501 43 | 0.741 70 | 0.609 48 | 0.548 65 | 0.950 56 | 0.522 46 | 0.371 5 | 0.633 54 | 0.756 59 | 0.715 43 | 0.771 48 | 0.623 49 | 0.861 85 | 0.814 62 | 0.658 53 | |

| Feihu Zhang, Jin Fang, Benjamin Wah, Philip Torr: Deep FusionNet for Point Cloud Semantic Segmentation. ECCV 2020 | ||||||||||||||||||||||

| PPCNN++ | 0.663 66 | 0.746 66 | 0.708 82 | 0.722 70 | 0.638 65 | 0.820 60 | 0.451 67 | 0.566 103 | 0.599 55 | 0.541 67 | 0.950 56 | 0.510 50 | 0.313 30 | 0.648 48 | 0.819 49 | 0.616 87 | 0.682 90 | 0.590 65 | 0.869 81 | 0.810 65 | 0.656 54 | |

| Pyunghwan Ahn, Juyoung Yang, Eojindl Yi, Chanho Lee, Junmo Kim: Projection-based Point Convolution for Efficient Point Cloud Segmentation. IEEE Access | ||||||||||||||||||||||

| ROSMRF3D | 0.673 61 | 0.789 45 | 0.748 68 | 0.763 64 | 0.635 66 | 0.814 67 | 0.407 89 | 0.747 67 | 0.581 64 | 0.573 60 | 0.950 56 | 0.484 58 | 0.271 57 | 0.607 61 | 0.754 60 | 0.649 72 | 0.774 45 | 0.596 61 | 0.883 63 | 0.823 56 | 0.606 71 | |

| SIConv | 0.625 88 | 0.830 34 | 0.694 91 | 0.757 65 | 0.563 87 | 0.772 92 | 0.448 71 | 0.647 93 | 0.520 83 | 0.509 80 | 0.949 60 | 0.431 82 | 0.191 93 | 0.496 93 | 0.614 86 | 0.647 75 | 0.672 94 | 0.535 95 | 0.876 70 | 0.783 87 | 0.571 83 | |

| PointMTL | 0.632 81 | 0.731 74 | 0.688 96 | 0.675 84 | 0.591 76 | 0.784 85 | 0.444 77 | 0.565 104 | 0.610 46 | 0.492 85 | 0.949 60 | 0.456 70 | 0.254 66 | 0.587 65 | 0.706 70 | 0.599 91 | 0.665 96 | 0.612 55 | 0.868 82 | 0.791 84 | 0.579 81 | |

| ODIN | 0.744 29 | 0.658 94 | 0.752 65 | 0.870 3 | 0.714 40 | 0.843 33 | 0.569 11 | 0.919 5 | 0.703 8 | 0.622 41 | 0.949 60 | 0.591 12 | 0.343 15 | 0.736 34 | 0.784 56 | 0.816 7 | 0.838 2 | 0.672 31 | 0.918 37 | 0.854 40 | 0.725 29 | |

| Ayush Jain, Pushkal Katara, Nikolaos Gkanatsios, Adam W. Harley, Gabriel Sarch, Kriti Aggarwal, Vishrav Chaudhary, Katerina Fragkiadaki: ODIN: A Single Model for 2D and 3D Segmentation. CVPR 2024 | ||||||||||||||||||||||

| Virtual MVFusion | 0.746 26 | 0.771 56 | 0.819 14 | 0.848 15 | 0.702 43 | 0.865 10 | 0.397 92 | 0.899 13 | 0.699 9 | 0.664 21 | 0.948 63 | 0.588 15 | 0.330 24 | 0.746 32 | 0.851 39 | 0.764 21 | 0.796 30 | 0.704 12 | 0.935 21 | 0.866 29 | 0.728 25 | |

| Abhijit Kundu, Xiaoqi Yin, Alireza Fathi, David Ross, Brian Brewington, Thomas Funkhouser, Caroline Pantofaru: Virtual Multi-view Fusion for 3D Semantic Segmentation. ECCV 2020 | ||||||||||||||||||||||

| FPConv | 0.639 75 | 0.785 47 | 0.760 58 | 0.713 75 | 0.603 72 | 0.798 78 | 0.392 95 | 0.534 108 | 0.603 53 | 0.524 75 | 0.948 63 | 0.457 69 | 0.250 67 | 0.538 83 | 0.723 67 | 0.598 92 | 0.696 85 | 0.614 52 | 0.872 77 | 0.799 71 | 0.567 87 | |

| Yiqun Lin, Zizheng Yan, Haibin Huang, Dong Du, Ligang Liu, Shuguang Cui, Xiaoguang Han: FPConv: Learning Local Flattening for Point Convolution. CVPR 2020 | ||||||||||||||||||||||

| AttAN | 0.609 93 | 0.760 61 | 0.667 100 | 0.649 95 | 0.521 96 | 0.793 81 | 0.457 65 | 0.648 92 | 0.528 81 | 0.434 101 | 0.947 65 | 0.401 94 | 0.153 107 | 0.454 99 | 0.721 68 | 0.648 74 | 0.717 76 | 0.536 94 | 0.904 45 | 0.765 94 | 0.485 106 | |

| Gege Zhang, Qinghua Ma, Licheng Jiao, Fang Liu and Qigong Sun: AttAN: Attention Adversarial Networks for 3D Point Cloud Semantic Segmentation. IJCAI2020 | ||||||||||||||||||||||

| PD-Net | 0.638 76 | 0.797 43 | 0.769 55 | 0.641 99 | 0.590 77 | 0.820 60 | 0.461 64 | 0.537 107 | 0.637 36 | 0.536 70 | 0.947 65 | 0.388 97 | 0.206 85 | 0.656 44 | 0.668 79 | 0.647 75 | 0.732 72 | 0.585 69 | 0.868 82 | 0.793 78 | 0.473 110 | |

| RFCR | 0.702 47 | 0.889 19 | 0.745 71 | 0.813 38 | 0.672 52 | 0.818 64 | 0.493 51 | 0.815 48 | 0.623 40 | 0.610 45 | 0.947 65 | 0.470 64 | 0.249 68 | 0.594 64 | 0.848 40 | 0.705 49 | 0.779 42 | 0.646 38 | 0.892 57 | 0.823 56 | 0.611 67 | |

| Jingyu Gong, Jiachen Xu, Xin Tan, Haichuan Song, Yanyun Qu, Yuan Xie, Lizhuang Ma: Omni-Supervised Point Cloud Segmentation via Gradual Receptive Field Component Reasoning. CVPR2021 | ||||||||||||||||||||||

| Pointnet++ & Feature | 0.557 103 | 0.735 71 | 0.661 102 | 0.686 81 | 0.491 100 | 0.744 100 | 0.392 95 | 0.539 106 | 0.451 103 | 0.375 107 | 0.946 68 | 0.376 99 | 0.205 86 | 0.403 105 | 0.356 109 | 0.553 101 | 0.643 102 | 0.497 101 | 0.824 95 | 0.756 97 | 0.515 100 | |

| HPEIN | 0.618 91 | 0.729 75 | 0.668 99 | 0.647 96 | 0.597 75 | 0.766 93 | 0.414 86 | 0.680 84 | 0.520 83 | 0.525 74 | 0.946 68 | 0.432 80 | 0.215 81 | 0.493 94 | 0.599 87 | 0.638 80 | 0.617 106 | 0.570 72 | 0.897 52 | 0.806 66 | 0.605 73 | |

| Li Jiang, Hengshuang Zhao, Shu Liu, Xiaoyong Shen, Chi-Wing Fu, Jiaya Jia: Hierarchical Point-Edge Interaction Network for Point Cloud Semantic Segmentation. ICCV 2019 | ||||||||||||||||||||||

| Superpoint Network | 0.683 58 | 0.851 28 | 0.728 79 | 0.800 50 | 0.653 57 | 0.806 73 | 0.468 60 | 0.804 51 | 0.572 67 | 0.602 51 | 0.946 68 | 0.453 74 | 0.239 72 | 0.519 87 | 0.822 46 | 0.689 59 | 0.762 56 | 0.595 63 | 0.895 54 | 0.827 54 | 0.630 64 | |

| HPGCNN | 0.656 69 | 0.698 89 | 0.743 73 | 0.650 94 | 0.564 86 | 0.820 60 | 0.505 41 | 0.758 63 | 0.631 38 | 0.479 88 | 0.945 71 | 0.480 60 | 0.226 74 | 0.572 71 | 0.774 58 | 0.690 57 | 0.735 70 | 0.614 52 | 0.853 88 | 0.776 91 | 0.597 77 | |

| Jisheng Dang, Qingyong Hu, Yulan Guo, Jun Yang: HPGCNN. | ||||||||||||||||||||||

| Feature_GeometricNet | 0.690 52 | 0.884 20 | 0.754 63 | 0.795 51 | 0.647 60 | 0.818 64 | 0.422 84 | 0.802 53 | 0.612 45 | 0.604 49 | 0.945 71 | 0.462 67 | 0.189 94 | 0.563 75 | 0.853 37 | 0.726 35 | 0.765 52 | 0.632 44 | 0.904 45 | 0.821 59 | 0.606 71 | |

| Kangcheng Liu, Ben M. Chen: https://arxiv.org/abs/2012.09439. arXiv Preprint | ||||||||||||||||||||||

| RandLA-Net | 0.645 71 | 0.778 50 | 0.731 78 | 0.699 77 | 0.577 82 | 0.829 49 | 0.446 72 | 0.736 71 | 0.477 96 | 0.523 77 | 0.945 71 | 0.454 71 | 0.269 59 | 0.484 96 | 0.749 63 | 0.618 85 | 0.738 68 | 0.599 60 | 0.827 93 | 0.792 81 | 0.621 66 | |

| contrastBoundary | 0.705 45 | 0.769 59 | 0.775 50 | 0.809 42 | 0.687 48 | 0.820 60 | 0.439 80 | 0.812 50 | 0.661 25 | 0.591 57 | 0.945 71 | 0.515 48 | 0.171 99 | 0.633 54 | 0.856 33 | 0.720 39 | 0.796 30 | 0.668 32 | 0.889 59 | 0.847 45 | 0.689 44 | |

| Liyao Tang, Yibing Zhan, Zhe Chen, Baosheng Yu, Dacheng Tao: Contrastive Boundary Learning for Point Cloud Segmentation. CVPR2022 | ||||||||||||||||||||||

| Feature-Geometry Net | 0.685 54 | 0.866 23 | 0.748 68 | 0.819 35 | 0.645 62 | 0.794 80 | 0.450 70 | 0.802 53 | 0.587 60 | 0.604 49 | 0.945 71 | 0.464 66 | 0.201 89 | 0.554 78 | 0.840 42 | 0.723 38 | 0.732 72 | 0.602 59 | 0.907 43 | 0.822 58 | 0.603 74 | |

| VACNN++ | 0.684 55 | 0.728 76 | 0.757 62 | 0.776 59 | 0.690 45 | 0.804 75 | 0.464 63 | 0.816 46 | 0.577 66 | 0.587 58 | 0.945 71 | 0.508 51 | 0.276 52 | 0.671 42 | 0.710 69 | 0.663 67 | 0.750 65 | 0.589 66 | 0.881 65 | 0.832 52 | 0.653 55 | |

| PointCNN with RGB | 0.458 111 | 0.577 107 | 0.611 111 | 0.356 122 | 0.321 118 | 0.715 104 | 0.299 111 | 0.376 115 | 0.328 118 | 0.319 112 | 0.944 77 | 0.285 111 | 0.164 103 | 0.216 119 | 0.229 114 | 0.484 107 | 0.545 113 | 0.456 109 | 0.755 102 | 0.709 107 | 0.475 109 | |

| Yangyan Li, Rui Bu, Mingchao Sun, Baoquan Chen: PointCNN. NeurIPS 2018 | ||||||||||||||||||||||

| dtc_net | 0.625 88 | 0.703 87 | 0.751 66 | 0.794 52 | 0.535 93 | 0.848 27 | 0.480 55 | 0.676 87 | 0.528 81 | 0.469 91 | 0.944 77 | 0.454 71 | 0.004 121 | 0.464 98 | 0.636 83 | 0.704 50 | 0.758 59 | 0.548 87 | 0.924 30 | 0.787 85 | 0.492 104 | |

| PointNet2-SFPN | 0.631 82 | 0.771 56 | 0.692 93 | 0.672 85 | 0.524 95 | 0.837 40 | 0.440 79 | 0.706 81 | 0.538 78 | 0.446 96 | 0.944 77 | 0.421 89 | 0.219 79 | 0.552 79 | 0.751 62 | 0.591 94 | 0.737 69 | 0.543 90 | 0.901 50 | 0.768 93 | 0.557 91 | |

| PointConv-SFPN | 0.641 72 | 0.776 52 | 0.703 84 | 0.721 71 | 0.557 89 | 0.826 52 | 0.451 67 | 0.672 88 | 0.563 74 | 0.483 87 | 0.943 80 | 0.425 87 | 0.162 104 | 0.644 49 | 0.726 65 | 0.659 69 | 0.709 79 | 0.572 71 | 0.875 71 | 0.786 86 | 0.559 90 | |

| SAFNet-seg | 0.654 70 | 0.752 64 | 0.734 77 | 0.664 90 | 0.583 81 | 0.815 66 | 0.399 91 | 0.754 65 | 0.639 35 | 0.535 71 | 0.942 81 | 0.470 64 | 0.309 32 | 0.665 43 | 0.539 93 | 0.650 71 | 0.708 80 | 0.635 43 | 0.857 87 | 0.793 78 | 0.642 59 | |

| Linqing Zhao, Jiwen Lu, Jie Zhou: Similarity-Aware Fusion Network for 3D Semantic Segmentation. IROS 2021 | ||||||||||||||||||||||

| PointSPNet | 0.637 77 | 0.734 72 | 0.692 93 | 0.714 74 | 0.576 83 | 0.797 79 | 0.446 72 | 0.743 69 | 0.598 56 | 0.437 99 | 0.942 81 | 0.403 93 | 0.150 108 | 0.626 56 | 0.800 55 | 0.649 72 | 0.697 84 | 0.557 81 | 0.846 90 | 0.777 90 | 0.563 88 | |

| DCM-Net | 0.658 67 | 0.778 50 | 0.702 85 | 0.806 46 | 0.619 69 | 0.813 70 | 0.468 60 | 0.693 83 | 0.494 90 | 0.524 75 | 0.941 83 | 0.449 76 | 0.298 37 | 0.510 89 | 0.821 47 | 0.675 62 | 0.727 74 | 0.568 75 | 0.826 94 | 0.803 69 | 0.637 61 | |

| Jonas Schult*, Francis Engelmann*, Theodora Kontogianni, Bastian Leibe: DualConvMesh-Net: Joint Geodesic and Euclidean Convolutions on 3D Meshes. CVPR 2020 [Oral] | ||||||||||||||||||||||

| PCNN | 0.498 109 | 0.559 108 | 0.644 106 | 0.560 109 | 0.420 109 | 0.711 105 | 0.229 118 | 0.414 111 | 0.436 106 | 0.352 110 | 0.941 83 | 0.324 107 | 0.155 106 | 0.238 116 | 0.387 108 | 0.493 105 | 0.529 115 | 0.509 97 | 0.813 97 | 0.751 99 | 0.504 103 | |

| LAP-D | 0.594 95 | 0.720 81 | 0.692 93 | 0.637 100 | 0.456 105 | 0.773 91 | 0.391 97 | 0.730 73 | 0.587 60 | 0.445 98 | 0.940 85 | 0.381 98 | 0.288 43 | 0.434 102 | 0.453 103 | 0.591 94 | 0.649 99 | 0.581 70 | 0.777 100 | 0.749 100 | 0.610 69 | |

| DPC | 0.592 96 | 0.720 81 | 0.700 87 | 0.602 105 | 0.480 101 | 0.762 96 | 0.380 100 | 0.713 79 | 0.585 63 | 0.437 99 | 0.940 85 | 0.369 100 | 0.288 43 | 0.434 102 | 0.509 99 | 0.590 96 | 0.639 104 | 0.567 76 | 0.772 101 | 0.755 98 | 0.592 79 | |

| Francis Engelmann, Theodora Kontogianni, Bastian Leibe: Dilated Point Convolutions: On the Receptive Field Size of Point Convolutions on 3D Point Clouds. ICRA 2020 | ||||||||||||||||||||||

| DenSeR | 0.628 86 | 0.800 42 | 0.625 108 | 0.719 72 | 0.545 92 | 0.806 73 | 0.445 74 | 0.597 98 | 0.448 104 | 0.519 79 | 0.938 87 | 0.481 59 | 0.328 25 | 0.489 95 | 0.499 100 | 0.657 70 | 0.759 58 | 0.592 64 | 0.881 65 | 0.797 74 | 0.634 62 | |

| MVPNet | 0.641 72 | 0.831 33 | 0.715 80 | 0.671 87 | 0.590 77 | 0.781 86 | 0.394 93 | 0.679 85 | 0.642 33 | 0.553 64 | 0.937 88 | 0.462 67 | 0.256 65 | 0.649 46 | 0.406 106 | 0.626 83 | 0.691 87 | 0.666 33 | 0.877 69 | 0.792 81 | 0.608 70 | |

| Maximilian Jaritz, Jiayuan Gu, Hao Su: Multi-view PointNet for 3D Scene Understanding. GMDL Workshop, ICCV 2019 | ||||||||||||||||||||||

| DGCNN_reproduce | 0.446 113 | 0.474 117 | 0.623 109 | 0.463 116 | 0.366 113 | 0.651 111 | 0.310 107 | 0.389 114 | 0.349 116 | 0.330 111 | 0.937 88 | 0.271 113 | 0.126 111 | 0.285 112 | 0.224 115 | 0.350 118 | 0.577 108 | 0.445 112 | 0.625 114 | 0.723 105 | 0.394 114 | |

| Yue Wang, Yongbin Sun, Ziwei Liu, Sanjay E. Sarma, Michael M. Bronstein, Justin M. Solomon: Dynamic Graph CNN for Learning on Point Clouds. TOG 2019 | ||||||||||||||||||||||

| ROSMRF | 0.580 98 | 0.772 55 | 0.707 83 | 0.681 83 | 0.563 87 | 0.764 94 | 0.362 102 | 0.515 109 | 0.465 100 | 0.465 93 | 0.936 90 | 0.427 86 | 0.207 84 | 0.438 100 | 0.577 90 | 0.536 102 | 0.675 93 | 0.486 104 | 0.723 107 | 0.779 88 | 0.524 99 | |

| JSENet | 0.699 49 | 0.881 21 | 0.762 57 | 0.821 33 | 0.667 53 | 0.800 77 | 0.522 33 | 0.792 56 | 0.613 44 | 0.607 48 | 0.935 91 | 0.492 54 | 0.205 86 | 0.576 69 | 0.853 37 | 0.691 56 | 0.758 59 | 0.652 36 | 0.872 77 | 0.828 53 | 0.649 56 | |

| Zeyu HU, Mingmin Zhen, Xuyang BAI, Hongbo Fu, Chiew-lan Tai: JSENet: Joint Semantic Segmentation and Edge Detection Network for 3D Point Clouds. ECCV 2020 | ||||||||||||||||||||||

| KP-FCNN | 0.684 55 | 0.847 29 | 0.758 61 | 0.784 56 | 0.647 60 | 0.814 67 | 0.473 57 | 0.772 59 | 0.605 50 | 0.594 56 | 0.935 91 | 0.450 75 | 0.181 97 | 0.587 65 | 0.805 52 | 0.690 57 | 0.785 40 | 0.614 52 | 0.882 64 | 0.819 60 | 0.632 63 | |

| H. Thomas, C. Qi, J. Deschaud, B. Marcotegui, F. Goulette, L. Guibas.: KPConv: Flexible and Deformable Convolution for Point Clouds. ICCV 2019 | ||||||||||||||||||||||

| SPH3D-GCN | 0.610 92 | 0.858 27 | 0.772 51 | 0.489 114 | 0.532 94 | 0.792 83 | 0.404 90 | 0.643 94 | 0.570 71 | 0.507 83 | 0.935 91 | 0.414 91 | 0.046 118 | 0.510 89 | 0.702 72 | 0.602 90 | 0.705 82 | 0.549 86 | 0.859 86 | 0.773 92 | 0.534 97 | |

| Huan Lei, Naveed Akhtar, and Ajmal Mian: Spherical Kernel for Efficient Graph Convolution on 3D Point Clouds. TPAMI 2020 | ||||||||||||||||||||||

| TextureNet | 0.566 101 | 0.672 93 | 0.664 101 | 0.671 87 | 0.494 99 | 0.719 103 | 0.445 74 | 0.678 86 | 0.411 110 | 0.396 104 | 0.935 91 | 0.356 102 | 0.225 76 | 0.412 104 | 0.535 94 | 0.565 100 | 0.636 105 | 0.464 107 | 0.794 99 | 0.680 111 | 0.568 86 | |

| Jingwei Huang, Haotian Zhang, Li Yi, Thomas Funkerhouser, Matthias Niessner, Leonidas Guibas: TextureNet: Consistent Local Parametrizations for Learning from High-Resolution Signals on Meshes. CVPR | ||||||||||||||||||||||

| PointContrast_LA_SEM | 0.683 58 | 0.757 63 | 0.784 45 | 0.786 54 | 0.639 64 | 0.824 55 | 0.408 87 | 0.775 58 | 0.604 52 | 0.541 67 | 0.934 95 | 0.532 41 | 0.269 59 | 0.552 79 | 0.777 57 | 0.645 78 | 0.793 34 | 0.640 41 | 0.913 42 | 0.824 55 | 0.671 49 | |

| wsss-transformer | 0.600 94 | 0.634 99 | 0.743 73 | 0.697 79 | 0.601 74 | 0.781 86 | 0.437 81 | 0.585 101 | 0.493 91 | 0.446 96 | 0.933 96 | 0.394 95 | 0.011 120 | 0.654 45 | 0.661 82 | 0.603 89 | 0.733 71 | 0.526 96 | 0.832 92 | 0.761 96 | 0.480 107 | |

| subcloud_weak | 0.516 106 | 0.676 91 | 0.591 115 | 0.609 102 | 0.442 106 | 0.774 90 | 0.335 105 | 0.597 98 | 0.422 109 | 0.357 109 | 0.932 97 | 0.341 105 | 0.094 114 | 0.298 111 | 0.528 97 | 0.473 109 | 0.676 92 | 0.495 102 | 0.602 116 | 0.721 106 | 0.349 118 | |

| SegGroup_sem | 0.627 87 | 0.818 38 | 0.747 70 | 0.701 76 | 0.602 73 | 0.764 94 | 0.385 99 | 0.629 95 | 0.490 92 | 0.508 81 | 0.931 98 | 0.409 92 | 0.201 89 | 0.564 74 | 0.725 66 | 0.618 85 | 0.692 86 | 0.539 92 | 0.873 75 | 0.794 76 | 0.548 94 | |

| An Tao, Yueqi Duan, Yi Wei, Jiwen Lu, Jie Zhou: SegGroup: Seg-Level Supervision for 3D Instance and Semantic Segmentation. TIP 2022 | ||||||||||||||||||||||

| Supervoxel-CNN | 0.635 79 | 0.656 95 | 0.711 81 | 0.719 72 | 0.613 70 | 0.757 97 | 0.444 77 | 0.765 61 | 0.534 79 | 0.566 61 | 0.928 99 | 0.478 61 | 0.272 55 | 0.636 51 | 0.531 95 | 0.664 66 | 0.645 101 | 0.508 99 | 0.864 84 | 0.792 81 | 0.611 67 | |

| SPLAT Net | 0.393 118 | 0.472 118 | 0.511 119 | 0.606 103 | 0.311 119 | 0.656 109 | 0.245 117 | 0.405 112 | 0.328 118 | 0.197 121 | 0.927 100 | 0.227 119 | 0.000 123 | 0.001 124 | 0.249 113 | 0.271 121 | 0.510 116 | 0.383 119 | 0.593 117 | 0.699 109 | 0.267 120 | |

| Hang Su, Varun Jampani, Deqing Sun, Subhransu Maji, Evangelos Kalogerakis, Ming-Hsuan Yang, Jan Kautz: SPLATNet: Sparse Lattice Networks for Point Cloud Processing. CVPR 2018 | ||||||||||||||||||||||

| SALANet | 0.670 62 | 0.816 39 | 0.770 54 | 0.768 61 | 0.652 58 | 0.807 72 | 0.451 67 | 0.747 67 | 0.659 28 | 0.545 66 | 0.924 101 | 0.473 63 | 0.149 109 | 0.571 72 | 0.811 51 | 0.635 82 | 0.746 66 | 0.623 49 | 0.892 57 | 0.794 76 | 0.570 84 | |

| SurfaceConvPF | 0.442 114 | 0.505 113 | 0.622 110 | 0.380 121 | 0.342 116 | 0.654 110 | 0.227 119 | 0.397 113 | 0.367 114 | 0.276 116 | 0.924 101 | 0.240 117 | 0.198 91 | 0.359 108 | 0.262 112 | 0.366 115 | 0.581 107 | 0.435 114 | 0.640 113 | 0.668 112 | 0.398 113 | |

| Hao Pan, Shilin Liu, Yang Liu, Xin Tong: Convolutional Neural Networks on 3D Surfaces Using Parallel Frames. | ||||||||||||||||||||||

| CCRFNet | 0.589 97 | 0.766 60 | 0.659 103 | 0.683 82 | 0.470 104 | 0.740 101 | 0.387 98 | 0.620 97 | 0.490 92 | 0.476 89 | 0.922 103 | 0.355 103 | 0.245 70 | 0.511 88 | 0.511 98 | 0.571 99 | 0.643 102 | 0.493 103 | 0.872 77 | 0.762 95 | 0.600 75 | |

| FCPN | 0.447 112 | 0.679 90 | 0.604 114 | 0.578 108 | 0.380 111 | 0.682 108 | 0.291 112 | 0.106 122 | 0.483 95 | 0.258 120 | 0.920 104 | 0.258 115 | 0.025 119 | 0.231 118 | 0.325 110 | 0.480 108 | 0.560 111 | 0.463 108 | 0.725 106 | 0.666 113 | 0.231 122 | |

| Dario Rethage, Johanna Wald, Jürgen Sturm, Nassir Navab, Federico Tombari: Fully-Convolutional Point Networks for Large-Scale Point Clouds. ECCV 2018 | ||||||||||||||||||||||

| FusionAwareConv | 0.630 85 | 0.604 105 | 0.741 75 | 0.766 63 | 0.590 77 | 0.747 99 | 0.501 43 | 0.734 72 | 0.503 89 | 0.527 73 | 0.919 105 | 0.454 71 | 0.323 27 | 0.550 81 | 0.420 105 | 0.678 61 | 0.688 88 | 0.544 88 | 0.896 53 | 0.795 75 | 0.627 65 | |

| Jiazhao Zhang, Chenyang Zhu, Lintao Zheng, Kai Xu: Fusion-Aware Point Convolution for Online Semantic 3D Scene Segmentation. CVPR 2020 | ||||||||||||||||||||||

| Tangent Convolutions | 0.438 116 | 0.437 119 | 0.646 105 | 0.474 115 | 0.369 112 | 0.645 112 | 0.353 103 | 0.258 119 | 0.282 121 | 0.279 115 | 0.918 106 | 0.298 110 | 0.147 110 | 0.283 113 | 0.294 111 | 0.487 106 | 0.562 110 | 0.427 115 | 0.619 115 | 0.633 116 | 0.352 117 | |

| Maxim Tatarchenko, Jaesik Park, Vladlen Koltun, Qian-Yi Zhou: Tangent convolutions for dense prediction in 3d. CVPR 2018 | ||||||||||||||||||||||

| 3DMV, FTSDF | 0.501 108 | 0.558 109 | 0.608 113 | 0.424 120 | 0.478 102 | 0.690 106 | 0.246 116 | 0.586 100 | 0.468 98 | 0.450 95 | 0.911 107 | 0.394 95 | 0.160 105 | 0.438 100 | 0.212 116 | 0.432 112 | 0.541 114 | 0.475 106 | 0.742 104 | 0.727 104 | 0.477 108 | |

| SSC-UNet | 0.308 122 | 0.353 120 | 0.290 123 | 0.278 123 | 0.166 122 | 0.553 120 | 0.169 122 | 0.286 118 | 0.147 123 | 0.148 123 | 0.908 108 | 0.182 121 | 0.064 117 | 0.023 123 | 0.018 123 | 0.354 117 | 0.363 121 | 0.345 121 | 0.546 120 | 0.685 110 | 0.278 119 | |

| DVVNet | 0.562 102 | 0.648 96 | 0.700 87 | 0.770 60 | 0.586 80 | 0.687 107 | 0.333 106 | 0.650 91 | 0.514 86 | 0.475 90 | 0.906 109 | 0.359 101 | 0.223 78 | 0.340 109 | 0.442 104 | 0.422 113 | 0.668 95 | 0.501 100 | 0.708 108 | 0.779 88 | 0.534 97 | |

| ScanNet+FTSDF | 0.383 119 | 0.297 121 | 0.491 120 | 0.432 119 | 0.358 115 | 0.612 117 | 0.274 114 | 0.116 121 | 0.411 110 | 0.265 117 | 0.904 110 | 0.229 118 | 0.079 116 | 0.250 114 | 0.185 119 | 0.320 119 | 0.510 116 | 0.385 118 | 0.548 118 | 0.597 121 | 0.394 114 | |

| GMLPs | 0.538 104 | 0.495 114 | 0.693 92 | 0.647 96 | 0.471 103 | 0.793 81 | 0.300 109 | 0.477 110 | 0.505 88 | 0.358 108 | 0.903 111 | 0.327 106 | 0.081 115 | 0.472 97 | 0.529 96 | 0.448 111 | 0.710 77 | 0.509 97 | 0.746 103 | 0.737 102 | 0.554 93 | |

| SQN_0.1% | 0.569 100 | 0.676 91 | 0.696 90 | 0.657 91 | 0.497 98 | 0.779 89 | 0.424 83 | 0.548 105 | 0.515 85 | 0.376 106 | 0.902 112 | 0.422 88 | 0.357 10 | 0.379 107 | 0.456 102 | 0.596 93 | 0.659 97 | 0.544 88 | 0.685 110 | 0.665 114 | 0.556 92 | |

| MVF-GNN | 0.658 67 | 0.558 109 | 0.751 66 | 0.655 92 | 0.690 45 | 0.722 102 | 0.453 66 | 0.867 24 | 0.579 65 | 0.576 59 | 0.893 113 | 0.523 44 | 0.293 40 | 0.733 35 | 0.571 91 | 0.692 54 | 0.659 97 | 0.606 56 | 0.875 71 | 0.804 68 | 0.668 50 | |

| PNET2 | 0.442 114 | 0.548 111 | 0.548 117 | 0.597 106 | 0.363 114 | 0.628 116 | 0.300 109 | 0.292 117 | 0.374 113 | 0.307 113 | 0.881 114 | 0.268 114 | 0.186 95 | 0.238 116 | 0.204 118 | 0.407 114 | 0.506 119 | 0.449 110 | 0.667 112 | 0.620 117 | 0.462 112 | |

| SD-DETR | 0.576 99 | 0.746 66 | 0.609 112 | 0.445 118 | 0.517 97 | 0.643 113 | 0.366 101 | 0.714 78 | 0.456 102 | 0.468 92 | 0.870 115 | 0.432 80 | 0.264 62 | 0.558 77 | 0.674 76 | 0.586 97 | 0.688 88 | 0.482 105 | 0.739 105 | 0.733 103 | 0.537 96 | |

| 3DSM_DMMF | 0.631 82 | 0.626 100 | 0.745 71 | 0.801 49 | 0.607 71 | 0.751 98 | 0.506 40 | 0.729 74 | 0.565 72 | 0.491 86 | 0.866 116 | 0.434 79 | 0.197 92 | 0.595 63 | 0.630 84 | 0.709 46 | 0.705 82 | 0.560 78 | 0.875 71 | 0.740 101 | 0.491 105 | |

| GrowSP++ | 0.323 121 | 0.114 123 | 0.589 116 | 0.499 112 | 0.147 123 | 0.555 119 | 0.290 113 | 0.336 116 | 0.290 120 | 0.262 118 | 0.865 117 | 0.102 123 | 0.000 123 | 0.037 122 | 0.000 124 | 0.000 124 | 0.462 120 | 0.381 120 | 0.389 122 | 0.664 115 | 0.473 110 | |

| PanopticFusion-label | 0.529 105 | 0.491 115 | 0.688 96 | 0.604 104 | 0.386 110 | 0.632 114 | 0.225 120 | 0.705 82 | 0.434 107 | 0.293 114 | 0.815 118 | 0.348 104 | 0.241 71 | 0.499 92 | 0.669 78 | 0.507 104 | 0.649 99 | 0.442 113 | 0.796 98 | 0.602 118 | 0.561 89 | |

| Gaku Narita, Takashi Seno, Tomoya Ishikawa, Yohsuke Kaji: PanopticFusion: Online Volumetric Semantic Mapping at the Level of Stuff and Things. IROS 2019 (to appear) | ||||||||||||||||||||||

| 3DMV | 0.484 110 | 0.484 116 | 0.538 118 | 0.643 98 | 0.424 108 | 0.606 118 | 0.310 107 | 0.574 102 | 0.433 108 | 0.378 105 | 0.796 119 | 0.301 109 | 0.214 82 | 0.537 84 | 0.208 117 | 0.472 110 | 0.507 118 | 0.413 116 | 0.693 109 | 0.602 118 | 0.539 95 | |

| Angela Dai, Matthias Niessner: 3DMV: Joint 3D-Multi-View Prediction for 3D Semantic Scene Segmentation. ECCV'18 | ||||||||||||||||||||||

| ScanNet | 0.306 123 | 0.203 122 | 0.366 122 | 0.501 111 | 0.311 119 | 0.524 121 | 0.211 121 | 0.002 124 | 0.342 117 | 0.189 122 | 0.786 120 | 0.145 122 | 0.102 113 | 0.245 115 | 0.152 120 | 0.318 120 | 0.348 122 | 0.300 122 | 0.460 121 | 0.437 123 | 0.182 123 | |

| Angela Dai, Angel X. Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, Matthias Nießner: ScanNet: Richly-annotated 3D Reconstructions of Indoor Scenes. CVPR'17 | ||||||||||||||||||||||

| Online SegFusion | 0.515 107 | 0.607 104 | 0.644 106 | 0.579 107 | 0.434 107 | 0.630 115 | 0.353 103 | 0.628 96 | 0.440 105 | 0.410 102 | 0.762 121 | 0.307 108 | 0.167 102 | 0.520 86 | 0.403 107 | 0.516 103 | 0.565 109 | 0.447 111 | 0.678 111 | 0.701 108 | 0.514 101 | |

| Davide Menini, Suryansh Kumar, Martin R. Oswald, Erik Sandstroem, Cristian Sminchisescu, Luc van Gool: A Real-Time Learning Framework for Joint 3D Reconstruction and Semantic Segmentation. Robotics and Automation Letters Submission | ||||||||||||||||||||||

| PointNet++ | 0.339 120 | 0.584 106 | 0.478 121 | 0.458 117 | 0.256 121 | 0.360 123 | 0.250 115 | 0.247 120 | 0.278 122 | 0.261 119 | 0.677 122 | 0.183 120 | 0.117 112 | 0.212 120 | 0.145 121 | 0.364 116 | 0.346 123 | 0.232 123 | 0.548 118 | 0.523 122 | 0.252 121 | |

| Charles R. Qi, Li Yi, Hao Su, Leonidas J. Guibas: pointnet++: deep hierarchical feature learning on point sets in a metric space. | ||||||||||||||||||||||

| 3DWSSS | 0.425 117 | 0.525 112 | 0.647 104 | 0.522 110 | 0.324 117 | 0.488 122 | 0.077 123 | 0.712 80 | 0.353 115 | 0.401 103 | 0.636 123 | 0.281 112 | 0.176 98 | 0.340 109 | 0.565 92 | 0.175 122 | 0.551 112 | 0.398 117 | 0.370 123 | 0.602 118 | 0.361 116 | |

| ERROR | 0.054 124 | 0.000 124 | 0.041 124 | 0.172 124 | 0.030 124 | 0.062 124 | 0.001 124 | 0.035 123 | 0.004 124 | 0.051 124 | 0.143 124 | 0.019 124 | 0.003 122 | 0.041 121 | 0.050 122 | 0.003 123 | 0.054 124 | 0.018 124 | 0.005 124 | 0.264 124 | 0.082 124 | |