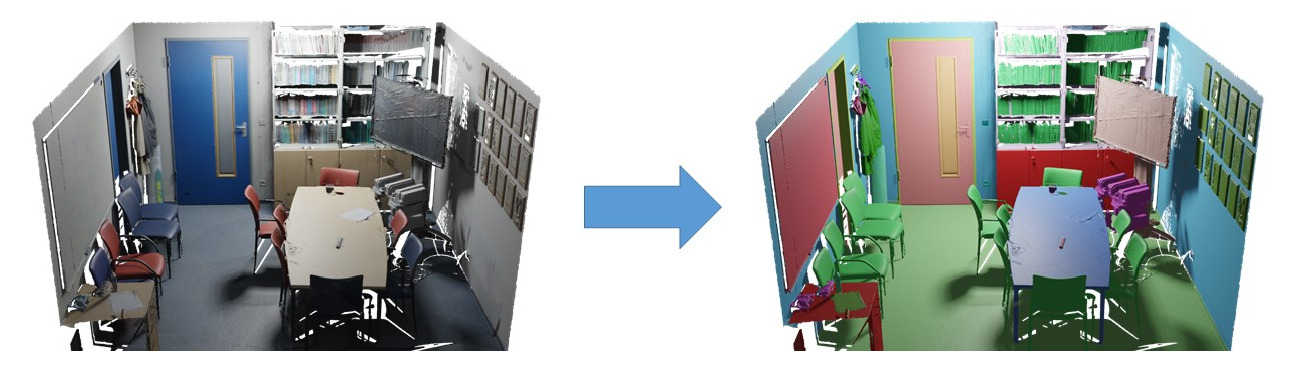

3D Semantic Segmentation

This task involves predicting a semantic labeling of the 3D scan mesh obtained from the laser-scanner point cloud. Submissions must provide a semantic label for each vertex of the 3D mesh.

Evaluation and Metrics

The mesh surface is manually annotated with a long-tailed set of labels, from which we pick the top 100 classes for evaluation.

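We rank methods by the intersection-over-union (IoU) metric, following ScanNet: IoU = TP/(TP+FP+FN), where TP, FP, and FN are the numbers of true-positive, false-positive, and false-negative 3D vertices, respectively. IoU is computed per class and averaged over classes to give the mean IoU (mIoU) reported in the results table.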
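As an illustration of the IoU formula, the sketch below counts TP, FP, and FN per class over per-vertex labels and averages the per-class values. It is a minimal sketch, not the official evaluation script: the function names are hypothetical, and skipping classes that appear in neither ground truth nor prediction when averaging is an assumption.

```python
from collections import Counter


def per_class_iou(gt, pred, num_classes):
    """Per-class IoU = TP / (TP + FP + FN), counted over mesh vertices.

    gt, pred: equal-length sequences of integer class ids, one per vertex.
    Returns one IoU per class; classes with TP + FP + FN == 0 get None.
    """
    if len(gt) != len(pred):
        raise ValueError("gt and pred must have one entry per vertex")
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gt, pred):
        if g == p:
            tp[g] += 1
        else:
            fp[p] += 1  # vertex labelled p that does not belong to class p
            fn[g] += 1  # vertex of class g that the method missed
    ious = []
    for c in range(num_classes):
        denom = tp[c] + fp[c] + fn[c]
        ious.append(tp[c] / denom if denom else None)
    return ious


def mean_iou(ious):
    """Average over classes; classes marked None are skipped (an assumption)."""
    valid = [x for x in ious if x is not None]
    return sum(valid) / len(valid) if valid else 0.0


gt = [0, 0, 1, 1, 2]
pred = [0, 1, 1, 1, 2]
ious = per_class_iou(gt, pred, num_classes=3)
print(ious)  # [0.5, 0.6666666666666666, 1.0]
```

Here vertex 1 (true class 0, predicted class 1) counts once as a false negative for class 0 and once as a false positive for class 1, which is why both classes fall below 1.0.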

Predicted labels are evaluated per vertex over the vertices of the 5% decimated 3D scan mesh (mesh_aligned_0.05.ply). Methods that operate on other representations, such as grids or points, must map their predicted labels onto the mesh vertices.
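One common way to map predictions from points or voxels onto the mesh vertices is nearest-neighbour label transfer. The benchmark text does not prescribe a method, so the sketch below is only one possible choice; the function name is hypothetical, and loading mesh_aligned_0.05.ply (for example with a PLY reader) is left out.

```python
import math


def transfer_labels_nearest(src_points, src_labels, mesh_vertices):
    """Give each mesh vertex the label of its nearest source point.

    src_points:    (x, y, z) positions where the method made predictions
                   (e.g. input points or voxel centres)
    src_labels:    predicted class id for each source point
    mesh_vertices: (x, y, z) vertices of the evaluation mesh
    """
    if len(src_points) != len(src_labels) or not src_points:
        raise ValueError("need one label per source point, and at least one point")
    labels = []
    for v in mesh_vertices:
        # Brute-force nearest neighbour: O(V * P), fine for a toy example.
        nearest = min(range(len(src_points)), key=lambda j: math.dist(v, src_points[j]))
        labels.append(src_labels[nearest])
    return labels


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
labels = [3, 7]
vertices = [(0.1, 0.0, 0.0), (0.9, 0.1, 0.0), (0.4, 0.0, 0.0)]
print(transfer_labels_nearest(points, labels, vertices))  # [3, 7, 3]
```

The brute-force search keeps the example dependency-free; for full-size meshes a spatial index such as `scipy.spatial.cKDTree` would be the usual replacement for the inner `min`.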

Anonymized vertices are excluded from evaluation; they are listed in mesh_aligned_0.05_mask.txt.
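A minimal sketch of dropping the anonymized vertices before scoring is shown below. It assumes, without confirmation from this page, that mesh_aligned_0.05_mask.txt lists one integer vertex index per line; both function names are hypothetical.

```python
def parse_anonymized_indices(text):
    """Parse the contents of mesh_aligned_0.05_mask.txt into a set of vertex indices.

    ASSUMPTION: the file lists one integer vertex index per line; blank lines
    are ignored. Check the actual file format before relying on this.
    """
    return {int(line) for line in text.splitlines() if line.strip()}


def drop_excluded(gt, pred, excluded):
    """Keep only the vertices that take part in evaluation."""
    kept = [i for i in range(len(gt)) if i not in excluded]
    return [gt[i] for i in kept], [pred[i] for i in kept]


excluded = parse_anonymized_indices("3\n0\n\n5\n")
gt, pred = drop_excluded([1, 2, 3, 4, 5, 6], [1, 1, 3, 3, 5, 5], excluded)
print(gt, pred)  # [2, 3, 5] [1, 3, 5]
```

With a real scene, `excluded` would come from reading the file, e.g. `with open("mesh_aligned_0.05_mask.txt") as f: excluded = parse_anonymized_indices(f.read())`, and the filtered labels would then be passed to the IoU computation.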

Multilabel Evaluation

The ScanNet++ ground truth contains multilabels: in ambiguous cases, a vertex can have more than one label. Hence, we evaluate both the Top-1 and the Top-3 performance of methods.

Submissions may contain either a single prediction per vertex or 3 predictions per vertex.

Top-1 evaluation considers the top prediction for each vertex and counts it as correct if it matches any ground-truth label for that vertex.

Top-3 evaluation considers the top 3 predictions for each vertex and counts the vertex as correct if any of them matches the ground truth. For multilabeled vertices, all ground-truth labels must be present in the top 3 predictions for the vertex to be considered correct. Submissions with a single prediction per vertex are evaluated as if they had 3 predictions per vertex, with the same prediction repeated 3 times.
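The Top-1 and Top-3 rules above can be sketched as per-vertex predicates. This is an illustrative reading of the rules, not the official evaluation code: the function names are hypothetical, and this section does not say how the per-vertex decisions are then folded into the IoU counts, so the sketch stops at the correctness check.

```python
def pad_to_three(preds):
    """Expand a single prediction to three by repetition, as the rules describe."""
    preds = list(preds)
    if len(preds) == 1:
        return preds * 3
    if len(preds) != 3:
        raise ValueError("expected 1 or 3 predictions per vertex")
    return preds


def top1_correct(preds, gt_labels):
    """Top-1: the first prediction must match ANY ground-truth label."""
    return pad_to_three(preds)[0] in set(gt_labels)


def top3_correct(preds, gt_labels):
    """Top-3: single-label vertices need one hit among the top 3;
    multilabeled vertices need ALL their labels among the top 3."""
    top3 = set(pad_to_three(preds))
    gt = set(gt_labels)
    if len(gt) > 1:
        return gt <= top3   # every ground-truth label is predicted
    return bool(gt & top3)  # the single ground-truth label is predicted


# A multilabeled vertex (labels 4 and 7) with a single-prediction submission:
print(top1_correct([4], {4, 7}))  # True: 4 matches one of the labels
print(top3_correct([4], {4, 7}))  # False: [4, 4, 4] never covers label 7
```

The second call shows why the padding rule matters: a single-prediction submission can never satisfy Top-3 on a multilabeled vertex.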

Results

The benchmark is currently evaluated on the v2 version of the dataset.| Methods | MIOU | AIR VENT | BACKPACK | BAG | BASKET | BED | BINDER | BLANKET | BLIND RAIL | BLINDS | BOARD | BOOK | BOOKSHELF | BOTTLE | BOWL | BOX | BUCKET | CABINET | CEILING | CEILING LAMP | CHAIR | CLOCK | CLOTH | CLOTHES HANGER | COAT HANGER | COMPUTER TOWER | CONTAINER | CRATE | CUP | CURTAIN | CUSHION | CUTTING BOARD | DOOR | DOORFRAME | ELECTRICAL DUCT | EXHAUST FAN | FILE FOLDER | FLOOR | HEADPHONES | HEATER | JACKET | JAR | KETTLE | KEYBOARD | KITCHEN CABINET | KITCHEN COUNTER | LAPTOP | LIGHT SWITCH | MARKER | MICROWAVE | MONITOR | MOUSE | OFFICE CHAIR | PAINTING | PAN | PAPER | PAPER BAG | PAPER TOWEL | PICTURE | PILLOW | PIPE | PLANT | PLANT POT | POSTER | POT | POWER STRIP | PRINTER | RACK | REFRIGERATOR | SHELF | SHOE RACK | SHOES | SHOWER WALL | SINK | SLIPPERS | SMOKE DETECTOR | SOAP DISPENSER | SOCKET | SOFA | SPEAKER | SPRAY BOTTLE | STAPLER | STORAGE CABINET | SUITCASE | TABLE | TABLE LAMP | TAP | TELEPHONE | TISSUE BOX | TOILET | TOILET BRUSH | TOILET PAPER | TOWEL | TRASH CAN | TV | WALL | WHITEBOARD | WHITEBOARD ERASER | WINDOW | WINDOW FRAME | WINDOWSILL |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SpUNet | 0.456 | 0.055 | 0.461 | 0.256 | 0.146 | 0.747 | 0.005 | 0.615 | 0.519 | 0.913 | 0.000 | 0.128 | 0.563 | 0.429 | 0.001 | 0.452 | 0.256 | 0.364 | 0.917 | 0.906 | 0.749 | 0.740 | 0.051 | 0.068 | 0.299 | 0.559 | 0.002 | 0.225 | 0.520 | 0.854 | 0.087 | 0.056 | 0.729 | 0.388 | 0.308 | 0.548 | 0.000 | 0.930 | 0.419 | 0.816 | 0.683 | 0.098 | 0.753 | 0.818 | 0.539 | 0.244 | 0.654 | 0.470 | 0.000 | 0.899 | 0.894 | 0.761 | 0.831 | 0.040 | 0.044 | 0.222 | 0.082 | 0.273 | 0.331 | 0.332 | 0.715 | 0.880 | 0.402 | 0.185 | 0.049 | 0.138 | 0.312 | 0.002 | 0.756 | 0.282 | 0.274 | 0.409 | 0.177 | 0.785 | 0.433 | 0.467 | 0.703 | 0.190 | 0.833 | 0.221 | 0.325 | 0.072 | 0.484 | 0.383 | 0.768 | 0.648 | 0.608 | 0.740 | 0.471 | 0.910 | 0.625 | 0.272 | 0.553 | 0.788 | 0.963 | 0.840 | 0.772 | 0.524 | 0.571 | 0.367 | 0.666 |

| . https://github.com/traveller59/spconv. https://github.com/traveller59/spconv | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| DITR | 0.525 | 0.171 | 0.563 | 0.492 | 0.218 | 0.835 | 0.012 | 0.722 | 0.561 | 0.903 | 0.093 | 0.503 | 0.529 | 0.554 | 0.268 | 0.601 | 0.453 | 0.339 | 0.913 | 0.939 | 0.800 | 0.737 | 0.077 | 0.130 | 0.256 | 0.656 | 0.000 | 0.826 | 0.620 | 0.837 | 0.000 | 0.333 | 0.708 | 0.444 | 0.235 | 0.558 | 0.002 | 0.944 | 0.570 | 0.823 | 0.712 | 0.235 | 0.786 | 0.879 | 0.609 | 0.194 | 0.785 | 0.452 | 0.000 | 0.901 | 0.888 | 0.782 | 0.893 | 0.000 | 0.495 | 0.314 | 0.114 | 0.301 | 0.565 | 0.321 | 0.638 | 0.918 | 0.650 | 0.365 | 0.023 | 0.529 | 0.532 | 0.000 | 0.860 | 0.227 | 0.277 | 0.426 | 0.404 | 0.742 | 0.490 | 0.468 | 0.602 | 0.486 | 0.811 | 0.546 | 0.399 | 0.110 | 0.410 | 0.708 | 0.801 | 0.691 | 0.524 | 0.726 | 0.534 | 0.872 | 0.651 | 0.307 | 0.678 | 0.862 | 0.966 | 0.859 | 0.820 | 0.472 | 0.635 | 0.437 | 0.632 |

| Karim Abou Zeid, Kadir Yilmaz, Daan de Geus, Alexander Hermans, David Adrian, Timm Linder, Bastian Leibe. DINO in the Room: Leveraging 2D Foundation Models for 3D Segmentation. | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| CAC | 0.483 | 0.074 | 0.557 | 0.499 | 0.152 | 0.825 | 0.000 | 0.734 | 0.471 | 0.888 | 0.000 | 0.188 | 0.527 | 0.420 | 0.324 | 0.509 | 0.512 | 0.348 | 0.918 | 0.934 | 0.783 | 0.735 | 0.053 | 0.069 | 0.397 | 0.523 | 0.077 | 0.251 | 0.508 | 0.811 | 0.062 | 0.228 | 0.731 | 0.428 | 0.479 | 0.390 | 0.000 | 0.943 | 0.501 | 0.851 | 0.700 | 0.135 | 0.736 | 0.803 | 0.531 | 0.203 | 0.853 | 0.420 | 0.000 | 0.690 | 0.893 | 0.776 | 0.880 | 0.000 | 0.192 | 0.153 | 0.000 | 0.304 | 0.561 | 0.345 | 0.790 | 0.907 | 0.599 | 0.480 | 0.000 | 0.420 | 0.347 | 0.000 | 0.593 | 0.205 | 0.265 | 0.433 | 0.367 | 0.772 | 0.591 | 0.367 | 0.701 | 0.354 | 0.795 | 0.310 | 0.000 | 0.069 | 0.472 | 0.419 | 0.770 | 0.699 | 0.503 | 0.586 | 0.559 | 0.891 | 0.668 | 0.311 | 0.621 | 0.811 | 0.983 | 0.855 | 0.806 | 0.408 | 0.638 | 0.394 | 0.645 |

| Zhuotao Tian, Jiequan Cui, Li Jiang, Xiaojuan Qi, Xin Lai, Yixin Chen, Shu Liu, Jiaya Jia. Learning Context-aware Classifier for Semantic Segmentation. AAAI 2023. | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| Sonata - PPT - PTv3 | 0.495 | 0.051 | 0.538 | 0.343 | 0.122 | 0.815 | 0.287 | 0.686 | 0.665 | 0.891 | 0.014 | 0.513 | 0.544 | 0.424 | 0.031 | 0.497 | 0.404 | 0.429 | 0.923 | 0.945 | 0.708 | 0.734 | 0.000 | 0.070 | 0.012 | 0.629 | 0.000 | 0.753 | 0.455 | 0.820 | 0.000 | 0.197 | 0.784 | 0.427 | 0.337 | 0.617 | 0.000 | 0.946 | 0.533 | 0.857 | 0.729 | 0.068 | 0.611 | 0.828 | 0.590 | 0.294 | 0.719 | 0.466 | 0.000 | 0.927 | 0.887 | 0.740 | 0.775 | 0.145 | 0.069 | 0.291 | 0.016 | 0.262 | 0.621 | 0.465 | 0.759 | 0.891 | 0.674 | 0.323 | 0.000 | 0.432 | 0.454 | 0.000 | 0.627 | 0.246 | 0.272 | 0.411 | 0.273 | 0.778 | 0.457 | 0.460 | 0.728 | 0.480 | 0.753 | 0.282 | 0.000 | 0.073 | 0.654 | 0.575 | 0.805 | 0.714 | 0.550 | 0.773 | 0.375 | 0.969 | 0.668 | 0.269 | 0.658 | 0.834 | 0.991 | 0.859 | 0.831 | 0.342 | 0.666 | 0.467 | 0.665 |

| Xiaoyang Wu, Daniel DeTone, Duncan Frost, Tianwei Shen, Chris Xie, Nan Yang, Jakob Engel, Richard Newcombe, Hengshuang Zhao, Julian Straub. Sonata: Self-Supervised Learning of Reliable Point Representations. CVPR 2025 Hightlight | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| PTv3 | 0.488 | 0.063 | 0.656 | 0.380 | 0.189 | 0.777 | 0.000 | 0.672 | 0.533 | 0.891 | 0.000 | 0.239 | 0.521 | 0.482 | 0.116 | 0.524 | 0.379 | 0.389 | 0.919 | 0.911 | 0.759 | 0.733 | 0.067 | 0.056 | 0.214 | 0.550 | 0.103 | 0.553 | 0.486 | 0.815 | 0.000 | 0.228 | 0.720 | 0.427 | 0.409 | 0.641 | 0.078 | 0.925 | 0.479 | 0.853 | 0.728 | 0.148 | 0.790 | 0.823 | 0.634 | 0.204 | 0.823 | 0.438 | 0.000 | 0.884 | 0.871 | 0.758 | 0.869 | 0.000 | 0.082 | 0.274 | 0.024 | 0.296 | 0.471 | 0.317 | 0.792 | 0.838 | 0.560 | 0.393 | 0.039 | 0.458 | 0.452 | 0.000 | 0.804 | 0.247 | 0.251 | 0.405 | 0.225 | 0.778 | 0.350 | 0.511 | 0.637 | 0.125 | 0.808 | 0.364 | 0.194 | 0.071 | 0.624 | 0.594 | 0.771 | 0.703 | 0.514 | 0.789 | 0.420 | 0.903 | 0.682 | 0.241 | 0.634 | 0.827 | 0.920 | 0.847 | 0.798 | 0.342 | 0.624 | 0.408 | 0.645 |

| Xiaoyang Wu, Li Jiang, Peng-Shuai Wang, Zhijian Liu, Xihui Liu, Yu Qiao, Wanli Ouyang, Tong He, Hengshuang Zhao. Point Transformer V3: Simpler Faster Stronger. CVPR 2024 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| OACNN | 0.470 | 0.149 | 0.491 | 0.325 | 0.125 | 0.805 | 0.000 | 0.652 | 0.554 | 0.859 | 0.000 | 0.154 | 0.559 | 0.480 | 0.107 | 0.508 | 0.237 | 0.376 | 0.909 | 0.904 | 0.759 | 0.725 | 0.021 | 0.082 | 0.269 | 0.555 | 0.000 | 0.698 | 0.519 | 0.760 | 0.054 | 0.226 | 0.699 | 0.471 | 0.283 | 0.676 | 0.002 | 0.933 | 0.433 | 0.836 | 0.607 | 0.109 | 0.627 | 0.822 | 0.540 | 0.320 | 0.520 | 0.484 | 0.003 | 0.884 | 0.827 | 0.742 | 0.859 | 0.006 | 0.041 | 0.199 | 0.012 | 0.264 | 0.470 | 0.399 | 0.826 | 0.911 | 0.414 | 0.255 | 0.000 | 0.326 | 0.287 | 0.000 | 0.783 | 0.244 | 0.244 | 0.410 | 0.169 | 0.783 | 0.480 | 0.459 | 0.697 | 0.199 | 0.796 | 0.310 | 0.132 | 0.057 | 0.522 | 0.550 | 0.781 | 0.649 | 0.612 | 0.794 | 0.488 | 0.903 | 0.697 | 0.270 | 0.650 | 0.823 | 0.819 | 0.839 | 0.780 | 0.472 | 0.617 | 0.396 | 0.655 |

| Bohao Peng, Xiaoyang Wu, Li Jiang, Yukang Chen, Hengshuang Zhao, Zhuotao Tian, Jiaya Jia. OA-CNNs: Omni-Adaptive Sparse CNNs for 3D Semantic Segmentation. CVPR 2024 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| OctFormer | 0.460 | 0.075 | 0.509 | 0.333 | 0.121 | 0.818 | 0.000 | 0.664 | 0.578 | 0.883 | 0.001 | 0.115 | 0.546 | 0.378 | 0.084 | 0.454 | 0.364 | 0.375 | 0.913 | 0.891 | 0.735 | 0.712 | 0.050 | 0.064 | 0.099 | 0.509 | 0.000 | 0.836 | 0.451 | 0.804 | 0.000 | 0.182 | 0.752 | 0.437 | 0.238 | 0.397 | 0.060 | 0.931 | 0.359 | 0.845 | 0.684 | 0.083 | 0.558 | 0.803 | 0.492 | 0.353 | 0.748 | 0.473 | 0.000 | 0.779 | 0.891 | 0.720 | 0.796 | 0.000 | 0.122 | 0.200 | 0.056 | 0.318 | 0.552 | 0.312 | 0.766 | 0.882 | 0.537 | 0.409 | 0.029 | 0.229 | 0.404 | 0.008 | 0.840 | 0.254 | 0.281 | 0.374 | 0.198 | 0.788 | 0.266 | 0.425 | 0.718 | 0.241 | 0.829 | 0.118 | 0.075 | 0.068 | 0.532 | 0.404 | 0.802 | 0.645 | 0.511 | 0.672 | 0.234 | 0.960 | 0.723 | 0.258 | 0.554 | 0.793 | 0.968 | 0.841 | 0.791 | 0.444 | 0.594 | 0.368 | 0.655 |

| Peng-Shuai Wang. OctFormer: Octree-based Transformers for 3D Point Clouds. SIGGRAPH 2023 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| PTv2 | 0.445 | 0.000 | 0.506 | 0.302 | 0.130 | 0.740 | 0.000 | 0.641 | 0.374 | 0.849 | 0.086 | 0.204 | 0.556 | 0.456 | 0.186 | 0.526 | 0.286 | 0.339 | 0.914 | 0.921 | 0.779 | 0.663 | 0.012 | 0.062 | 0.756 | 0.593 | 0.000 | 0.203 | 0.483 | 0.759 | 0.000 | 0.163 | 0.708 | 0.409 | 0.335 | 0.373 | 0.000 | 0.941 | 0.191 | 0.834 | 0.659 | 0.132 | 0.574 | 0.742 | 0.562 | 0.171 | 0.460 | 0.408 | 0.000 | 0.807 | 0.888 | 0.651 | 0.891 | 0.014 | 0.022 | 0.229 | 0.102 | 0.302 | 0.383 | 0.335 | 0.680 | 0.887 | 0.355 | 0.345 | 0.000 | 0.332 | 0.479 | 0.000 | 0.741 | 0.253 | 0.205 | 0.415 | 0.003 | 0.715 | 0.603 | 0.401 | 0.597 | 0.246 | 0.793 | 0.253 | 0.086 | 0.045 | 0.526 | 0.473 | 0.758 | 0.612 | 0.595 | 0.579 | 0.247 | 0.845 | 0.600 | 0.293 | 0.549 | 0.802 | 0.961 | 0.832 | 0.786 | 0.426 | 0.594 | 0.350 | 0.636 |

| Xiaoyang Wu, Yixing Lao, Li Jiang, Xihui Liu, Hengshuang Zhao. Xiaoyang Wu, Yixing Lao, Li Jiang, Xihui Liu, Hengshuang Zhao. NeurIPS 2022 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

Please refer to the submission instructions before making a submission.
